Terry Tao’s most recent blog post looks at the Chowla conjecture theoretically. This post looks at the same conjecture empirically using Python. (Which is much easier!)

The Liouville function λ(n) is (-1)Ω(n) where Ω(n) is the number of prime factors of n counted with multiplicity. So, for example, Ω(9) = 2 because even though 9 has only one distinct prime factor, that factor counts twice.

Given some set of k strides h1, h2, …, hk, define

f(n) = λ(n + h1) λ(n + h1) … λ(n + hk).

The Chowla conjecture says that the average of the first N values of f(n) tends to zero as N goes to infinity. This has been proven for k = 1 but not for larger k.

If f(n) acts like a Bernoulli random variable, our experiments might increase our confidence in the conjecture, but they wouldn’t prove anything. Unexpected results wouldn’t prove anything either, but a departure from random behavior might help find a counterexample if the conjecture is false.

We’re going to be evaluating the Liouville function repeatedly at the same arguments, so it will save some compute time if we tabulate its values. This will also let us use some compact Python notation to average f. We’ll need to tabulate the function up to N + hk.

In the code below, maxstride is an upper bound on the strides hk we may look at. SymPy has a function primeomega that calculates Ω(n) so we might as well use it. If you wanted to use a very large value of N, you might want to fill the array of Liouville function values using a more direct approach that avoids all the factoring implicit in calls to primeomega.

from sympy import primeomega

from numpy import empty

N = 10000

maxstride = 100

liouville = empty(N + maxstride)

liouville[0] = 1

for n in range(1, len(liouville)):

liouville[n] = (-1)**primeomega(n)

The following code looks at the Chowla conjecture for h1 = 0 and h2 ranging over 1, 2, …, 99.

average = empty(maxstride-1)

for s in range(1, maxstride):

average[s-1] = (liouville[0:N] * liouville[s:N+s]).sum()/N

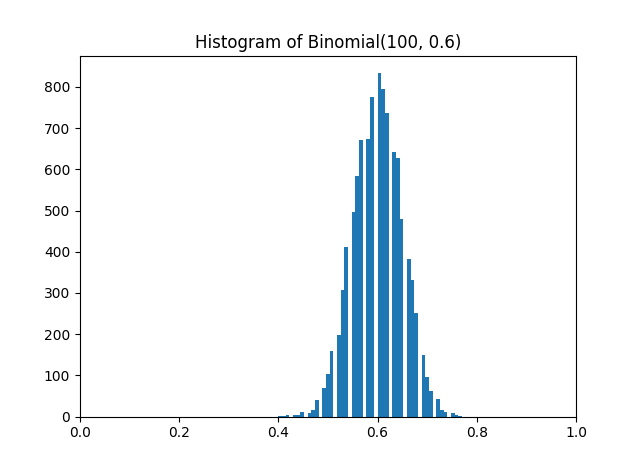

If the Liouville function is distributed like a random variable taking on -1 and 1 with equal probability, we’d expect the averages to vary like samples form a normal distribution with mean 0 and variance 1/(2N).

print(average.mean())

print(average.std())

print( (2*N)**-0.5 )

This returns

0.00141

0.00851

0.00707

and so the means are indeed near zero, and the standard deviation of the samples is about what we’d expect.

What if we replace Ω(n), the number of prime factors with multiplicity, with ω(n), the number of distinct prime factors? The averages above are still small, but the sample variance is about twice as big as before. I’ve seen this pattern with several different large values of N, so I suspect there’s something going on here.

(I didn’t see a function in SymPy corresponding to ω(n), but you can create your own with len(factorint(n)).