Someone with no exposure to probability or statistics likely has an intuitive sense that averaging random variables reduces variance, though they wouldn’t state it in those terms. They might, for example, agree that the average of several test grades gives a better assessment of a student than a single test grade. But data from a Cauchy distribution doesn’t behave this way.

Averages and scaling

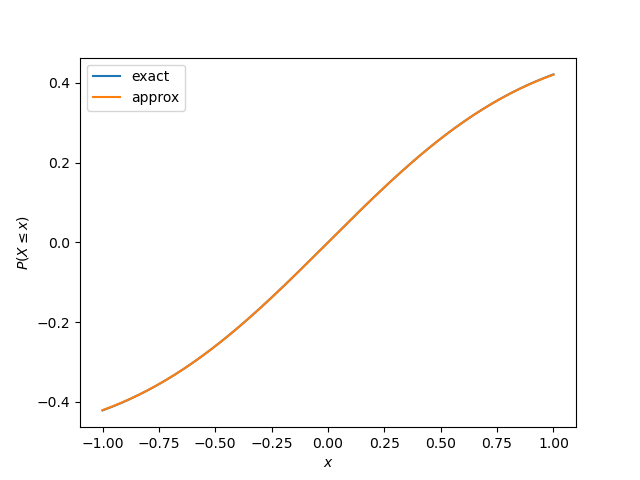

If you have four independent random variables, each normally distributed with the same scale parameter σ, then their average is also normally distributed but with scale parameter σ/2.

If you have four independent random variables, each Cauchy distributed with the same scale parameter σ, then their average is also Cauchy distributed, with exactly the same scale parameter σ.

So the normal distribution matches common intuition, but the Cauchy distribution does not.

In the case of random variables with a normal distribution, the scale parameter σ is also the standard deviation. In the case of random variables with a Cauchy distribution, the scale parameter σ is not the standard deviation because Cauchy random variables don’t have a variance, so they don’t have a standard deviation.
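Here is a minimal simulation sketch of the contrast, not a definitive implementation. Because a Cauchy random variable has no standard deviation, the sketch measures spread with half the interquartile range, which exists for both families. The helper names (`cauchy`, `half_iqr`, `spread_ratio`), the seed, and the sample sizes are assumptions chosen for illustration.

```python
import math
import random
import statistics

def cauchy(rng, scale=1.0):
    """Draw one Cauchy sample centered at 0 via the inverse CDF."""
    return scale * math.tan(math.pi * (rng.random() - 0.5))

def half_iqr(xs):
    """Half the interquartile range: a spread measure defined for both families."""
    q1, _, q3 = statistics.quantiles(xs, n=4)
    return (q3 - q1) / 2

def spread_ratio(draw, rng, n_avg=4, n_rep=20_000):
    """Spread of the average of n_avg draws, relative to the spread of one draw."""
    singles = [draw(rng) for _ in range(n_rep)]
    means = [sum(draw(rng) for _ in range(n_avg)) / n_avg for _ in range(n_rep)]
    return half_iqr(means) / half_iqr(singles)

rng = random.Random(0)  # fixed seed so the run is repeatable
normal_ratio = spread_ratio(lambda r: r.gauss(0.0, 1.0), rng)
cauchy_ratio = spread_ratio(cauchy, rng)

print(f"normal: {normal_ratio:.2f}  (theory 0.5)")
print(f"Cauchy: {cauchy_ratio:.2f}  (theory 1.0)")
```

Up to sampling noise, the normal ratio should land near 1/2 and the Cauchy ratio near 1.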

Modeling

Some people object that nothing really follows a Cauchy distribution because the Cauchy distribution has no mean or variance. But nothing really follows a normal distribution either. All probability distributions are idealizations. The question for any probability distribution is whether it adequately captures the aspect of reality it is being used to model.

Mean

Suppose some phenomenon appears to behave like it has a Cauchy distribution, with no mean. Alternatively, suppose the phenomenon has a mean, but the data are so variable that the mean is impossible to estimate. There’s no practical difference between the two.

Variance

And in the alternative case, suppose there is a finite variance, but the variance is so large that it is impossible to estimate. If you take the average of four observations, the result is still so variable that the variance is impossible to estimate. You’ve cut the theoretical standard deviation in half, and the variance to a quarter, but that makes no difference. Again this is practically indistinguishable from a Cauchy distribution.

Truncating

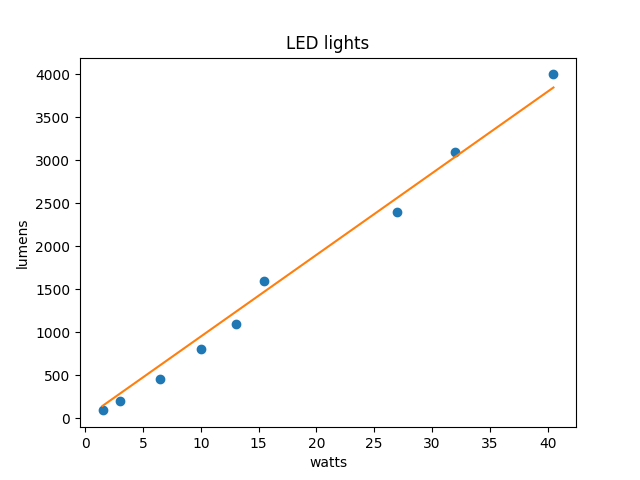

Now suppose you want to tame the Cauchy distribution by throwing out samples with absolute value greater than M. Now you have a truncated Cauchy distribution, and it has finite mean and variance.

But how do you choose M? If you don’t have an objective reason to choose a particular value of M, you would hope that your choice doesn’t matter too much. And that would be the case for a thin-tailed probability distribution like the normal, but it’s not true of the Cauchy distribution.

The variance of the truncated distribution is approximately proportional to M, so by choosing M you choose the variance. If you double your cutoff for discarding outliers, you approximately double the variance of what’s left. Your choice of M matters a great deal.
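To make the dependence on M concrete, here is a small sketch that assumes a standard Cauchy (location 0, scale 1) truncated to the interval [-M, M]. Integrating x²/(1 + x²) over that interval and dividing by the retained probability mass gives the variance M/arctan(M) − 1. This closed form is worked out for this sketch rather than taken from the text above, so the code cross-checks it numerically. The function names are hypothetical.

```python
import math

def truncated_cauchy_variance(M):
    """Variance of a standard Cauchy conditioned on |x| <= M (closed form)."""
    return M / math.atan(M) - 1.0

def truncated_cauchy_variance_numeric(M, steps=200_000):
    """The same quantity by midpoint-rule integration, as a cross-check."""
    h = 2 * M / steps
    num = 0.0  # integral of x^2 * f(x) over [-M, M]
    den = 0.0  # integral of f(x) over [-M, M], the retained probability mass
    for i in range(steps):
        x = -M + (i + 0.5) * h
        f = 1.0 / (math.pi * (1.0 + x * x))
        num += x * x * f * h
        den += f * h
    return num / den  # the mean is 0 by symmetry, so this is the variance

for M in (10, 20, 40, 80):
    v = truncated_cauchy_variance(M)
    print(f"M = {M:3d}  variance = {v:7.2f}  variance / M = {v / M:.3f}")
```

As M grows, arctan(M) approaches π/2, so the ratio variance/M tends to 2/π ≈ 0.64. Each doubling of M therefore roughly doubles the variance.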
