Newton’s interpolation formula looks awfully complicated until you introduce the right notation. With the right notation, it looks like a Taylor series. Not only is this notation simpler and more memorable, it also suggests extensions.

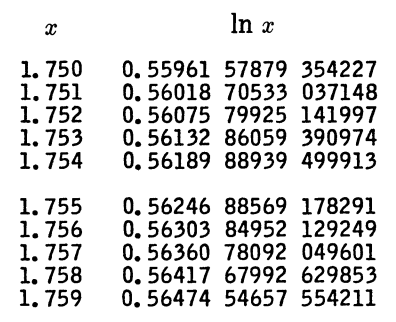

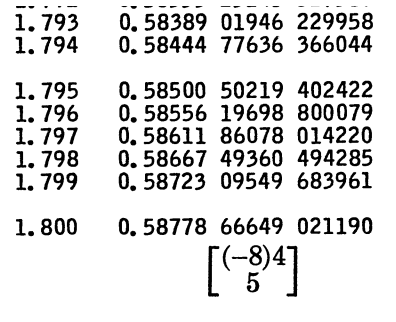

The notation we need comes in two parts. First, we need the forward difference operator Δ defined by

$$\Delta f(x) = f(x+1) - f(x)$$

and its extension $\Delta^k$ defined by applying Δ to a function k times.

The other piece of notation we need is falling powers, denoted with a little bar under the exponent:

$$x^{\underline{k}} = x(x-1)(x-2)\cdots(x-k+1)$$

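The Δ operator is meant to resemble the differential operator D and falling powers are meant to resemble ordinary powers. With this notation, Newton’s interpolation formula based at a

$$f(x) = \sum_{k=0}^\infty \frac{\Delta^k f(a)}{k!} (x-a)^{\underline{k}}$$

looks analogous to Taylor’s formula for a power series based at a

$$f(x) = \sum_{k=0}^\infty \frac{D^k f(a)}{k!} (x-a)^k$$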

Newton’s formula applies to polynomials, and the infinite sum is actually a finite sum because $\Delta^k f(a)$ is 0 for all k beyond some point: each application of Δ lowers the degree of a polynomial by one.
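As a small worked example (my own choice of function, not one from the original discussion), take f(x) = x² with a = 0. The differences vanish after the second one, so the series stops after three terms:

```latex
\begin{align*}
f(x) &= x^2, \qquad \Delta f(x) = (x+1)^2 - x^2 = 2x + 1, \qquad \Delta^2 f(x) = 2, \qquad \Delta^3 f(x) = 0 \\
f(0) &= 0, \qquad \Delta f(0) = 1, \qquad \Delta^2 f(0) = 2 \\
x^2 &= 0 + \frac{1}{1!}\, x + \frac{2}{2!}\, x(x-1) = x + x(x-1)
\end{align*}
```

Here x and x(x − 1) are the first and second falling powers of x.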

Newton’s formula is a discrete analog of Taylor’s formula because it only uses the values of f at discrete points, i.e. at the integers (shifted by a) and only involves finite operations: finite differences do not involve limits.
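To make the finite nature of the formula concrete, here is a minimal Python sketch. The function names (`forward_differences`, `falling_power`, `newton_series`) and the test polynomial are my own illustrative choices. The code builds a difference table from values of f at a, a + 1, ..., a + n, then sums the first n + 1 terms of the series.

```python
from math import factorial

def forward_differences(f, a, n):
    """Return [f(a), Δf(a), Δ²f(a), ..., Δⁿf(a)] from a difference table."""
    row = [f(a + j) for j in range(n + 1)]
    diffs = []
    for _ in range(n + 1):
        diffs.append(row[0])  # first entry of the k-th row is Δᵏf(a)
        row = [row[j + 1] - row[j] for j in range(len(row) - 1)]
    return diffs

def falling_power(x, k):
    """x(x-1)(x-2)...(x-k+1); the empty product (k = 0) is 1."""
    result = 1
    for j in range(k):
        result *= x - j
    return result

def newton_series(f, a, x, n):
    """Sum the terms k = 0..n of Newton's series for f based at a."""
    diffs = forward_differences(f, a, n)
    return sum(d * falling_power(x - a, k) / factorial(k)
               for k, d in enumerate(diffs))

# Hypothetical test polynomial: a cubic, so differences beyond the third vanish.
def cubic(x):
    return x**3 - 2*x + 5

print(forward_differences(cubic, 1, 5))
print(newton_series(cubic, 1, 2.5, 3), cubic(2.5))
```

Only values of the cubic at the integers 1 through 4 go into the three-term-plus-constant sum, yet it reproduces the polynomial at the non-integer point 2.5.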

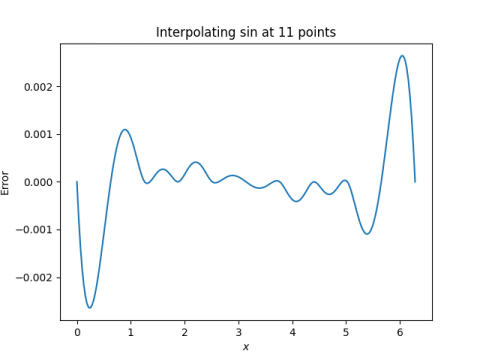

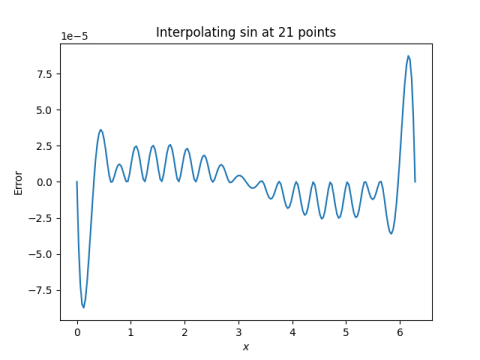

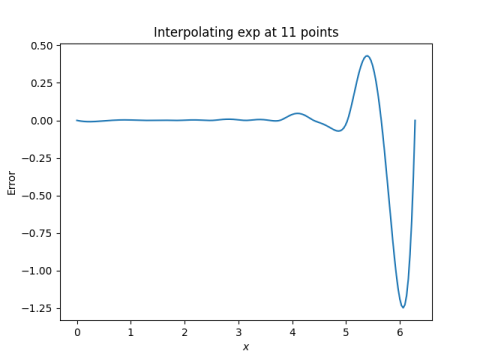

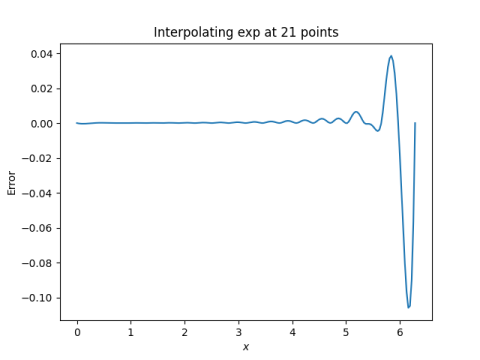

Convergence

As I mentioned on @AlgebraFact this morning,

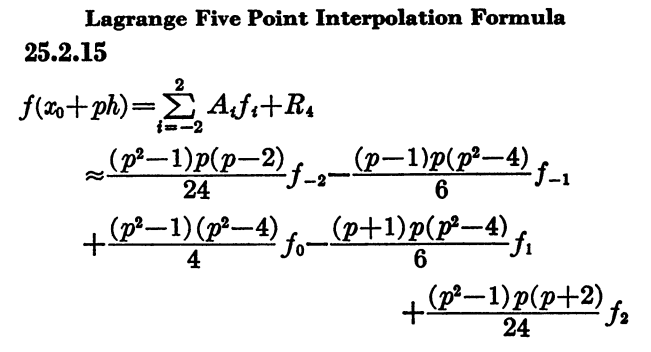

It’s often useful to write a finite series as an infinite series with a finite number of non-zero coefficients.

This eliminates the need to explicitly track the number of terms, and may suggest what to do next.

Writing Newton’s formula as an infinite series keeps us from having to write down one version for linear interpolation, another version for quadratic interpolation, another for cubic interpolation, etc. (It’s a good exercise to write out these special cases when you’re new to the topic, but then remember the infinite series version going forward.)
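For reference, truncating the series after the k = 1 and k = 2 terms gives the linear and quadratic special cases. This is just the general formula written out, with the differences expanded in terms of values of f:

```latex
\begin{align*}
\text{linear:} \quad f(x) &\approx f(a) + \Delta f(a)\,(x-a)
  = f(a) + \bigl(f(a+1) - f(a)\bigr)(x-a) \\
\text{quadratic:} \quad f(x) &\approx f(a) + \Delta f(a)\,(x-a)
  + \frac{\Delta^2 f(a)}{2}\,(x-a)(x-a-1), \\
\Delta^2 f(a) &= f(a+2) - 2f(a+1) + f(a)
\end{align*}
```

The approximations are exact when f is a polynomial of degree at most 1 or 2, respectively.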

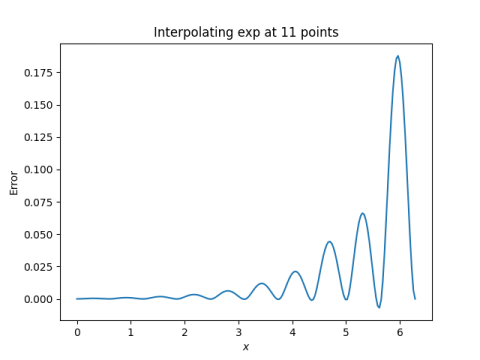

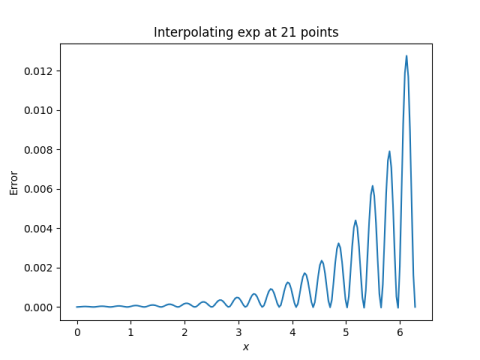

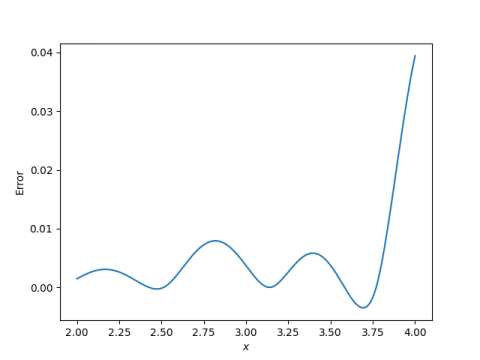

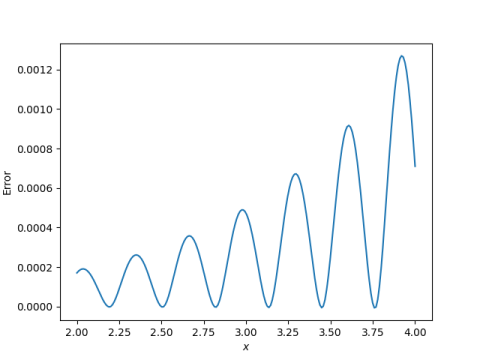

As for suggesting what to do next, it’s natural to explore what happens if the infinite series really is infinite, i.e. if f is not a polynomial. Under what circumstances does the series converge? If it does converge to something, does it necessarily converge to f(x) at each x?

The example f(x) = sin(πx) shows that Newton’s theorem can’t always hold: with a = 0, the series on the right-hand side of Newton’s theorem is identically zero, because f vanishes at every integer and so all the $\Delta^k f(0)$ terms are zero. But Carlson’s theorem [1] essentially says that for an entire function that grows slower than sin(πx) along the imaginary axis, the series in Newton’s theorem converges to f.
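A quick numerical sketch illustrates both behaviors. It is not a proof, and the second function, 1.5ˣ, is my own choice of a well-behaved example: all of its differences at 0 are powers of 1/2, so its Newton series is the binomial series for (1 + 1/2)ˣ. The helper names are hypothetical.

```python
import math

def forward_differences(values):
    """Given [f(0), f(1), ..., f(n)], return [Δᵏf(0) for k = 0..n]."""
    row = list(values)
    diffs = []
    while row:
        diffs.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs

def newton_partial_sum(f, x, n):
    """Terms k = 0..n of Newton's series for f based at a = 0."""
    diffs = forward_differences([f(j) for j in range(n + 1)])
    total = 0.0
    coeff = 1.0  # falling power x(x-1)...(x-k+1) divided by k!
    for k, d in enumerate(diffs):
        total += d * coeff
        coeff *= (x - k) / (k + 1)
    return total

def sine(x):
    return math.sin(math.pi * x)

def geometric(x):
    return 1.5 ** x

x = 0.5
# The sine series stays near 0 (up to rounding), although sin(π/2) = 1.
print(newton_partial_sum(sine, x, 10), sine(x))
# The series for 1.5**x approaches the true value as terms are added.
print(newton_partial_sum(geometric, x, 15), geometric(x))
```

The number of terms is kept small on purpose: high-order differences computed in floating point suffer from cancellation, so adding many more terms would mostly add rounding noise.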

Saying that a function is “entire” means that it is analytic in the entire complex plane. This means that Taylor’s series above has to converge everywhere in order for Newton’s series to converge [2].

[1] Carlson with no e. Not to be confused with Carleson’s theorem on the convergence of Fourier series.

[2] Carlson’s original theorem requires f to be entire. Later refinements show that it’s sufficient for f to be analytic in the open right half plane and continuous on the closed right half plane.