Often you have two lists, and you want to know which items belong to both lists, or which belong to one list but not the other. The command line utility comm was written for this task.

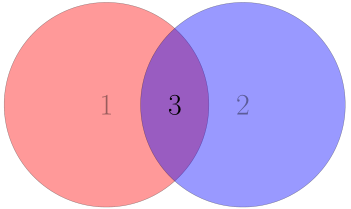

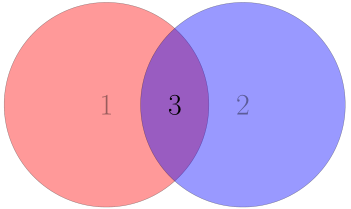

Given two files, A and B, comm returns its output in three columns:

1. A – B

2. B – A

3. A ∩ B.

Here the minus sign means set difference, i.e. A – B is the set of things in A but not in B.

The numbering above corresponds to command line options for comm. More on that shortly.

Difference and intersection

Here’s an example. Suppose we have a file states.txt containing a list of US states

Alabama

California

Georgia

Idaho

Virginia

and a file names.txt containing female names.

Charlotte

Della

Frances

Georgia

Margaret

Marilyn

Virginia

Then the command

comm states.txt names.txt

returns the following.

    Alabama
    California
    	Charlotte
    	Della
    	Frances
    		Georgia
    Idaho
    	Margaret
    	Marilyn
    		Virginia

The first column is states which are not female names. The second column is female names which are not states. The third column is states which are also female names.

Filtering output

The output of comm is tab-separated, so you could pull out one of the columns by piping the output through cut, but you probably don’t want to do that, for a couple of reasons. First, you get an unwanted blank line for every line of comm output that has nothing in the column you asked for. For example,

comm states.txt names.txt | cut -f 1

returns

    Alabama
    California
    
    
    
    
    Idaho

Worse, if you ask cut to return the second column with cut -f 2, you won’t get what you expect.

    Alabama
    California
    Charlotte
    Della
    Frances
    
    Idaho
    Margaret
    Marilyn

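This is because although comm uses tabs, it doesn’t produce a typical tab-separated file. Entries in the first column are not followed by any tabs, and cut passes a line containing no delimiter through unchanged. Entries in the third column are preceded by two tabs, so their second field is empty.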
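One way to see the structure for yourself is to make the tabs visible. The following is a minimal sketch, assuming a POSIX shell with mktemp available; the scratch directory and the variable name visible are my own choices for illustration. It recreates the two example files and replaces each tab with a > character.

```shell
# Use the C locale so that sort order and comm's comparisons agree.
export LC_ALL=C

# Recreate the example files in a scratch directory (an illustrative choice).
workdir=$(mktemp -d)
cd "$workdir" || exit 1
printf '%s\n' Alabama California Georgia Idaho Virginia > states.txt
printf '%s\n' Charlotte Della Frances Georgia Margaret Marilyn Virginia > names.txt

# Replace every tab with '>' so the leading tabs become visible.
visible=$(comm states.txt names.txt | tr '\t' '>')
printf '%s\n' "$visible"
```

Lines from the first column, such as Alabama, come out with no marker at all, second-column lines look like >Charlotte, and third-column lines look like >>Georgia. Only the leading tabs are there; nothing pads out the columns to the right.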

The way to filter comm output is to tell comm which columns to suppress.

If you only want the first column, states that are not names, use the option -23. That is, to select column 1, tell comm not to print columns 2 or 3. So

comm -23 states.txt names.txt

returns

Alabama

California

Idaho

with no blank lines. Similarly, if you just want column 2, use comm -13. And if you want the intersection, column 3, use comm -12.
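To try all three filters side by side, here is a small sketch that recreates the example files and captures each column separately. It assumes a POSIX shell with mktemp available; the scratch directory and the variable names only_states, only_names, and both are mine, not anything comm defines.

```shell
# Use the C locale so that sort order and comm's comparisons agree.
export LC_ALL=C

# Recreate the example files in a scratch directory (an illustrative choice).
workdir=$(mktemp -d)
cd "$workdir" || exit 1
printf '%s\n' Alabama California Georgia Idaho Virginia > states.txt
printf '%s\n' Charlotte Della Frances Georgia Margaret Marilyn Virginia > names.txt

only_states=$(comm -23 states.txt names.txt)  # column 1: states that are not names
only_names=$(comm -13 states.txt names.txt)   # column 2: names that are not states
both=$(comm -12 states.txt names.txt)         # column 3: states that are also names

printf 'States only:\n%s\n\n' "$only_states"
printf 'Names only:\n%s\n\n' "$only_names"
printf 'Both:\n%s\n' "$both"
```

Because the unwanted columns are suppressed rather than cut away afterward, each result has no leading tabs and no blank lines.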

Sorting

The comm utility assumes its input files are sorted. If they’re not, some implementations, such as GNU comm, will warn you.

If your files are not already sorted, you could sort them and send them to comm in a one-liner [1].

comm <(sort states.txt) <(sort names.txt)
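If your shell doesn’t support the <(...) syntax, a plainer alternative is to sort into temporary files first. The sketch below assumes a POSIX shell with mktemp available; the file names ending in .sorted and the variable common are hypothetical names chosen for illustration. It also sets LC_ALL=C, on the assumption that you want sort and comm to agree on a simple byte-wise ordering.

```shell
# sort and comm must use the same collation order; the C locale is a safe choice.
export LC_ALL=C

workdir=$(mktemp -d)

# Deliberately unsorted versions of the example files.
printf '%s\n' Idaho Alabama Virginia Georgia California > "$workdir/states.txt"
printf '%s\n' Marilyn Georgia Della Virginia Charlotte Margaret Frances > "$workdir/names.txt"

# Sort each file into a temporary copy, then compare the copies.
sort "$workdir/states.txt" > "$workdir/states.sorted"
sort "$workdir/names.txt" > "$workdir/names.sorted"
common=$(comm -12 "$workdir/states.sorted" "$workdir/names.sorted")
printf '%s\n' "$common"

# Clean up the scratch directory.
rm -r "$workdir"
```

This prints Georgia and Virginia, the same intersection as before, at the cost of two extra files.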

Multisets

If your input files are truly sets, i.e. no item appears twice, comm will act as you expect. If you actually have a multiset, i.e. items may appear more than once, the output may surprise you at first, though on further reflection you may agree it does what you’d hope.

Suppose you have a file places.txt of places

Cincinnati

Cleveland

Washington

Washington

and a file presidents.txt of US presidents.

Adams

Adams

Cleveland

Cleveland

Jefferson

Washington

Then

comm places.txt presidents.txt

produces the following output.

    	Adams
    	Adams
    Cincinnati
    		Cleveland
    	Cleveland
    	Jefferson
    		Washington
    Washington

Cleveland was on the list of presidents twice. (Grover Cleveland was the 22nd and the 24th president, the only US president to serve non-consecutive terms.) The output of comm lists Cleveland once in the intersection of places and presidents (say the 22nd president and a city in Ohio), but also lists him once as a president not corresponding to a place. That is because there are two Clevelands in our list of presidents but only one in our list of places.

Suppose we had included five places named Cleveland. (There are cities named Cleveland in several states.) Then comm would list Cleveland 3 times as a place not a president, and 2 times as a place and a president.

In general, the comm utility follows the mathematical conventions for multisets. Suppose an item x appears m times in multiset A, and n times in a multiset B. Then x appears max(m–n, 0) times in A–B, max(n–m, 0) times in B–A, and min(m, n) times in A ∩ B.
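You can check these counts against the places and presidents example. The sketch below assumes a POSIX shell with mktemp available; count_in is a hypothetical helper I made up for this illustration, not part of comm. It counts how often an item appears in one column of the comm output.

```shell
# Use the C locale so that sort order and comm's comparisons agree.
export LC_ALL=C

workdir=$(mktemp -d)
cd "$workdir" || exit 1
printf '%s\n' Cincinnati Cleveland Washington Washington > places.txt
printf '%s\n' Adams Adams Cleveland Cleveland Jefferson Washington > presidents.txt

# count_in FLAGS ITEM: number of times ITEM appears in the column selected by FLAGS.
# grep -c exits nonzero when the count is 0, so "|| true" keeps the status clean.
count_in() {
    comm "$1" places.txt presidents.txt | grep -c -x -- "$2" || true
}

# Cleveland: m = 1 place, n = 2 presidents.
cleveland_a_minus_b=$(count_in -23 Cleveland)   # max(1 - 2, 0) = 0
cleveland_b_minus_a=$(count_in -13 Cleveland)   # max(2 - 1, 0) = 1
cleveland_both=$(count_in -12 Cleveland)        # min(1, 2)     = 1

# Washington: m = 2 places, n = 1 president.
washington_a_minus_b=$(count_in -23 Washington) # max(2 - 1, 0) = 1
washington_b_minus_a=$(count_in -13 Washington) # max(1 - 2, 0) = 0
washington_both=$(count_in -12 Washington)      # min(2, 1)     = 1

printf 'Cleveland:  %s %s %s\n' "$cleveland_a_minus_b" "$cleveland_b_minus_a" "$cleveland_both"
printf 'Washington: %s %s %s\n' "$washington_a_minus_b" "$washington_b_minus_a" "$washington_both"
```

The three numbers on each line are the counts in A–B, B–A, and A ∩ B, and they match the formulas in the comments.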

Union

To form the union of two files as multisets of lines, just combine them into one file, with duplicates. You can join file1 and file2 with cat (short for “concatenate”).

cat file1 file2 > multiset_union_file

To find the union of two files as sets, first find the union as multisets, then remove duplicates.

cat file1 file2 | sort | uniq > set_union_file

Note that uniq, like comm, assumes files are sorted.
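Here is a short sketch of both kinds of union applied to the states and names files from earlier. It assumes a POSIX shell with mktemp available, and the variable names are mine. It also shows sort -u, a standard sort option that sorts and removes duplicates in one step, as an alternative to sort piped into uniq.

```shell
# Use the C locale so the sort order is predictable.
export LC_ALL=C

workdir=$(mktemp -d)
cd "$workdir" || exit 1
printf '%s\n' Alabama California Georgia Idaho Virginia > states.txt
printf '%s\n' Charlotte Della Frances Georgia Margaret Marilyn Virginia > names.txt

# Multiset union: every line of both files, duplicates kept.
multiset_count=$(cat states.txt names.txt | wc -l | tr -d ' ')

# Set union: concatenate, sort, then drop adjacent duplicates.
set_union=$(cat states.txt names.txt | sort | uniq)

# The same set union in a single command.
set_union_alt=$(sort -u states.txt names.txt)

set_count=$(printf '%s\n' "$set_union" | wc -l | tr -d ' ')
printf 'multiset union: %s lines, set union: %s lines\n' "$multiset_count" "$set_count"
```

The multiset union has 12 lines and the set union has 10, since Georgia and Virginia appear in both files.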


[1] The comm utility is native to Unix-like systems, but has been ported to Windows. The examples in this post will work on Windows with ports of the necessary utilities, except where we sort a file before sending it on to comm with <(sort file). That’s not a feature of sort or comm but of the shell, bash in my case. The Windows command line doesn’t support this syntax. (But bash ported to Windows would.)