The kernel of a linear transformation is the set of vectors mapped to 0. That’s a simple idea, and one you’ll find in every linear algebra textbook.

The cokernel is the dual of the kernel, but it’s much less commonly mentioned in textbooks. Sometimes the idea of a cokernel is there, but it’s not given that name.

Degrees of Freedom and Constraints

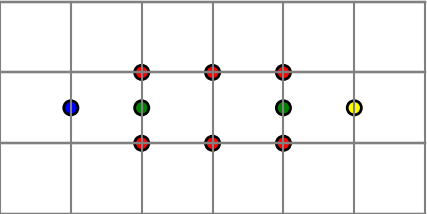

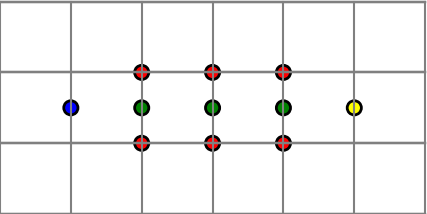

One way of thinking about kernel and cokernel is that the kernel represents degrees of freedom and the cokernel represents constraints.

Suppose you have a linear transformation T: V → W and you want to solve the equation Tx = b. If x is a solution and Tk = 0, then x + k is also a solution. You are free to add elements of the kernel of T to a solution.
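Here is a minimal Python sketch of that idea. The matrix A is a hypothetical example chosen for illustration. The vector k spans the kernel of A, so adding any multiple of k to a particular solution x gives another solution.

```python
from fractions import Fraction

def mat_vec(A, x):
    """Multiply a matrix A (a list of rows) by a vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

# Hypothetical T: R^3 -> R^2 given by the matrix A.
A = [[1, 1, 0],
     [0, 1, 1]]

x = [1, 0, 0]        # a particular solution ...
b = mat_vec(A, x)    # ... of A x = b, where b = [1, 0]
k = [1, -1, 1]       # spans the kernel of A

assert mat_vec(A, k) == [0, 0]

# x + t k solves A x = b for every scalar t.
for t in [Fraction(-2), Fraction(1, 3), Fraction(5)]:
    y = [xi + t * ki for xi, ki in zip(x, k)]
    assert mat_vec(A, y) == b
```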

If c is an element of W that is not in the image of T, then Tx = c has no solution, by definition. In order for Tx = b to have a solution, the vector b must not have any component in a subspace of W that is complementary to the image of T. This complementary space is the cokernel.

If W is an inner product space, we can define the cokernel as the orthogonal complement to the image of T.
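To make this concrete, here is a small Python sketch with a hypothetical map T: R² → R³, T(p, q) = (p, q, p + q), whose image is a plane in R³. The vector c = (1, 1, −1) spans the orthogonal complement of that plane, so it plays the role of the cokernel, and Tx = b is solvable exactly when the projection of b onto c is zero. The matrix, the vector c, and the helper names are all made up for this illustration.

```python
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def transpose(A):
    return [list(col) for col in zip(*A)]

# Hypothetical T: R^2 -> R^3. T(p, q) = (p, q, p + q), so the image is a plane in R^3.
A = [[1, 0],
     [0, 1],
     [1, 1]]

# c spans the orthogonal complement of the image: the cokernel.
c = [1, 1, -1]

# c is orthogonal to every column of A, i.e. A^T c = 0.
assert [dot(col, c) for col in transpose(A)] == [0, 0]

def cokernel_component(b):
    """Orthogonal projection of b onto the line spanned by c."""
    s = Fraction(dot(b, c), dot(c, c))
    return [s * ci for ci in c]

def solvable(b):
    """T x = b has a solution iff b has no component in the cokernel."""
    return all(v == 0 for v in cokernel_component(b))

print(solvable([1, 2, 3]))  # True: x = (1, 2) is a solution
print(solvable([1, 2, 4]))  # False

# Removing the cokernel component from b leaves a vector in the image.
b = [1, 2, 4]
b_fixed = [bi - ri for bi, ri in zip(b, cokernel_component(b))]
print(solvable(b_fixed))    # True
```

Subtracting the cokernel component is an orthogonal projection onto the image, so b_fixed is the solvable right-hand side closest to b.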

Another way to think of the kernel and cokernel is that in the linear equation Ax = b, the kernel consists of degrees of freedom that can be added to x, and the cokernel consists of degrees of freedom that must be removed from b in order for there to be a solution.

Cokernel definitions

You may also see the cokernel defined as the quotient space W / image(T). This is not the same space as the complement of the image of T: the complement is a subspace of W, while the quotient is a new space whose elements are cosets of the image. However, these two spaces are isomorphic. This is a little bit of foreshadowing: the most general idea of a cokernel will only hold up to isomorphism.

You may also see the cokernel defined as the kernel of the adjoint of T. This suggests where the name “cokernel” comes from: the dual of the kernel of an operator is the kernel of the dual of the operator.

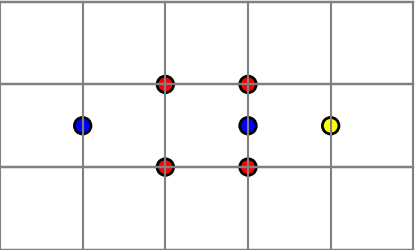

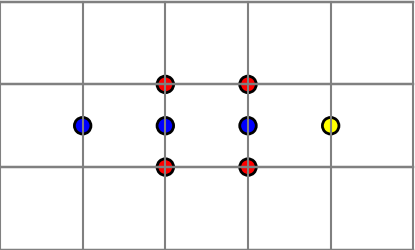

Kernel and cokernel dimensions

I mentioned above that there are multiple ways to define cokernel. They don’t all define the same space, but they define isomorphic spaces. And since isomorphic spaces have the same dimension, all definitions of cokernel give spaces with the same dimension.

There are several big theorems related to the dimensions of the kernel and cokernel. These are typically included in linear algebra textbooks, even those that don’t use the term “cokernel.” For example, the rank-nullity theorem can be stated without explicitly mentioning the cokernel, but it is equivalent to the following.

For a linear operator T: V → W,

dim V − dim W = dim ker T − dim coker T.
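We can spot-check this identity for matrices. The sketch below is an illustration, not a proof: it computes rank by exact Gaussian elimination over the rationals, takes dim ker T as the number of columns minus the rank, and takes dim coker T as the dimension of the kernel of the transpose, i.e. the number of rows minus the rank of the transpose. With these choices the identity amounts to the fact that a matrix and its transpose have the same rank. The helper names `rank`, `transpose`, and `dims` are hypothetical.

```python
import random
from fractions import Fraction

def rank(A):
    """Rank of a matrix (list of rows), by exact Gaussian elimination over the rationals."""
    M = [[Fraction(v) for v in row] for row in A]
    rows, cols = len(M), len(M[0])
    r = 0  # number of pivots found so far
    for j in range(cols):
        # Look for a nonzero pivot in column j, at or below row r.
        pivot = next((i for i in range(r, rows) if M[i][j] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        # Eliminate the entries below the pivot.
        for i in range(r + 1, rows):
            f = M[i][j] / M[r][j]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return r

def transpose(A):
    return [list(col) for col in zip(*A)]

def dims(A):
    """(dim V, dim W, dim ker T, dim coker T) for T: V -> W with m x n matrix A."""
    dim_W, dim_V = len(A), len(A[0])
    dim_ker = dim_V - rank(A)                # free variables of A x = 0
    dim_coker = dim_W - rank(transpose(A))   # cokernel as the kernel of the transpose
    return dim_V, dim_W, dim_ker, dim_coker

print(dims([[1, 2, 3], [2, 4, 6]]))   # (3, 2, 2, 1)

# Spot-check the identity on random integer matrices of various shapes.
random.seed(0)
for _ in range(200):
    m, n = random.randint(1, 5), random.randint(1, 5)
    A = [[random.randint(-2, 2) for _ in range(n)] for _ in range(m)]
    dim_V, dim_W, dim_ker, dim_coker = dims(A)
    assert dim_V - dim_W == dim_ker - dim_coker
```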

When V and W are finite dimensional, both sides are well defined. Otherwise, the right side may be defined when the left side is not. For example, let V and W both be the space of functions analytic in the entire complex plane and let T be the operator that takes the second derivative. Then the left side is ∞ − ∞ but the right side is 2: the kernel consists of all functions of the form az + b, and the cokernel is 0 because every analytic function has an antiderivative, so applying this twice shows every analytic function is the second derivative of another.
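Infinite-dimensional spaces can’t be enumerated in code, but here is a rough sketch of the same computation restricted to polynomials, stored as lists of coefficients where p[k] is the coefficient of z^k. Restricting to polynomials is an assumption made for illustration; the same coefficient formulas apply term by term to power series, and integrating a power series does not shrink its radius of convergence. The functions below are hypothetical helpers, not a library API.

```python
from fractions import Fraction

def second_derivative(p):
    """Coefficients of p'' where p[k] is the coefficient of z**k."""
    return [k * (k - 1) * p[k] for k in range(2, len(p))]

def double_antiderivative(q):
    """A polynomial P with P'' = q, choosing its constant and linear terms to be 0."""
    return [Fraction(0), Fraction(0)] + [Fraction(c, (k + 1) * (k + 2)) for k, c in enumerate(q)]

def is_zero(p):
    return all(c == 0 for c in p)

# Kernel: a z + b is killed by the second derivative.
assert is_zero(second_derivative([5, -3]))   # 5 - 3z

# Surjectivity: every polynomial is a second derivative.
q = [1, 2, 3]                                # 1 + 2z + 3z^2
P = double_antiderivative(q)                 # z^2/2 + z^3/3 + z^4/4
assert second_derivative(P) == q

# Adding a kernel element a z + b to P gives another preimage of q.
a, b = 7, -4
P2 = [P[0] + b, P[1] + a] + P[2:]
assert second_derivative(P2) == q
```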

The quantity on the right-hand side, dim ker T − dim coker T, is the definition of the index of a linear operator. This is the subject of the next post.

Full generality

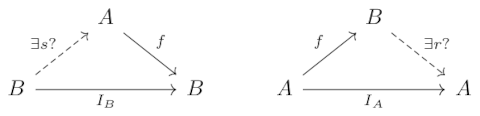

Up to this point the post has discussed kernels and cokernels in the context of linear algebra. But we could define the kernel and cokernel in contexts with less structure, such as groups, or more structure, such as Sobolev spaces. The most general definition is in terms of category theory.
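Here is a small illustration for groups, using finite cyclic groups. These are abelian, so the image is automatically a normal subgroup and the quotient defining the cokernel makes sense. The homomorphism f: Z/12 → Z/8 given by x ↦ 2x mod 8 is a made-up example. For finite groups, sizes play the role of dimensions: since |A| = |ker f| · |image f| and |coker f| = |B| / |image f|, the ratio |A| / |B| equals |ker f| / |coker f|, a multiplicative analog of the dimension identity for linear operators.

```python
def kernel_and_image(n, m, f):
    """Kernel and image of a homomorphism f: Z/n -> Z/m given as a Python function."""
    kernel = [x for x in range(n) if f(x) % m == 0]
    image = sorted({f(x) % m for x in range(n)})
    return kernel, image

def cokernel(m, image):
    """Cosets of image(f) in Z/m; these are the elements of (Z/m) / image(f)."""
    return {frozenset((y + h) % m for h in image) for y in range(m)}

def double(x):
    """Hypothetical example homomorphism Z/12 -> Z/8, x -> 2x mod 8."""
    return 2 * x

n, m = 12, 8

# x -> 2x is well defined on Z/12 only because 2 * 12 is divisible by 8.
assert double(n) % m == 0

ker, im = kernel_and_image(n, m, double)
coker = cokernel(m, im)

print(ker)         # [0, 4, 8]
print(im)          # [0, 2, 4, 6]
print(len(coker))  # 2

# Multiplicative analog of dim V - dim W = dim ker T - dim coker T.
assert n * len(coker) == m * len(ker)
```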

Category theory makes the “co” in cokernel obvious. The cokernel is the dual of the kernel in the same way that everything in category theory is related to its co-thing: simply turn all the arrows around. This makes the definition of cokernel easier, but it makes the definition of kernel harder.

We can’t simply define the kernel as “the stuff that gets mapped to 0” because category theory has no way to look inside objects. We can only speak of objects in terms of how they interact with other objects in their category. There’s no direct way to define 0, much less things that map to 0. But we can define something that acts like 0 (zero objects, which are both initial and terminal), and things that act like maps to 0 (zero morphisms), if the category we’re working in contains such things; not all categories do.

For all the tedious details, see the nLab articles on kernel and cokernel.

Related posts

- Categorical products

- How linear algebra is different in infinite dimensions

- Visualizing a determinant identity