Suppose you want to find all the proper nouns in a document. You could grep for every word that starts with a capital letter with something like

grep -E '\b[A-Z]\w+'

but this would return the first word of each sentence in addition to the words you’re after.

You could grep for capitalized words that are not preceded by a period or question mark followed by a space.

grep -P '(?<![.?] )\b[A-Z]\w+'

That’s possibly better, but it misses proper nouns at the beginning of a sentence.
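To see both failure modes side by side, here is a minimal sketch that runs the same two patterns through Python's re module rather than grep. The sample text is made up for illustration, and the variable names are my own.

```python
import re

# Made-up sample text: "Queequeg" is a proper noun that starts a sentence.
text = (
    "Call me Ishmael. Some years ago I sailed from Nantucket. "
    "Queequeg was already aboard."
)

# Pattern 1: every capitalized word of two or more characters.
capitalized = re.findall(r"\b[A-Z]\w+", text)

# Pattern 2: capitalized words not preceded by ". " or "? ".
not_after_stop = re.findall(r"(?<![.?] )\b[A-Z]\w+", text)

print(capitalized)     # ['Call', 'Ishmael', 'Some', 'Nantucket', 'Queequeg']
print(not_after_stop)  # ['Call', 'Ishmael', 'Nantucket']
```

The first pattern picks up Call and Some, which are not proper nouns. The second drops Some but also drops Queequeg, and it still keeps Call because nothing precedes the first word of the text.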

You might be able to accomplish what you’re after by tinkering with regular expressions, but it would be better to use a library that has some idea of what a proper noun is.

NLP with spaCy

The Python natural language processing library spaCy classifies words by part of speech, so in particular it can be used to search for proper nouns.

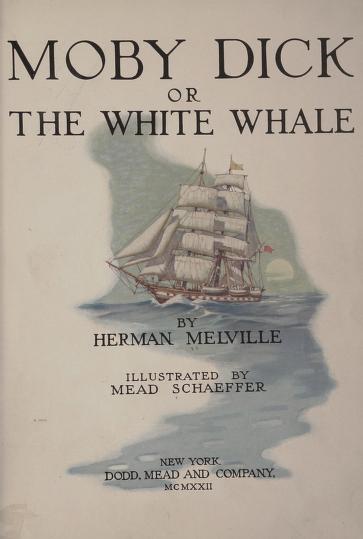

Here’s an example using the opening lines of Moby Dick.

import spacy

nlp = spacy.load("en_core_web_lg")

doc = nlp("Call me Ishmael. Some years ago—never mind how long precisely—having little or no money in my purse, and nothing particular to interest me on shore, I thought I would sail about a little and see the watery part of the world. Whenever I find myself growing grim about the mouth; whenever it is a damp, drizzly November in my soul ... I account it high time to get to sea as soon as I can.")

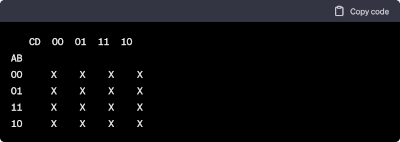

for tok in doc:
    if tok.pos_ == "PROPN":
        print(tok)

This will print Ishmael and November only. It does not print words at the beginning of a sentence such as Call or Some even though they are capitalized. When spaCy got to the line

Queequeg was George Washington cannibalistically developed.

it detected that Queequeg is a proper noun. Presumably the model can tell this from context, because the word precedes the verb was, and not because it knows Queequeg is a proper name.

When I changed November to november, spaCy was still able to detect that november was a proper noun. When I downcased Ishmael, it did not detect that ishmael was a proper noun, presumably because Ishmael is an uncommon name. When I changed the text to “Call me tim”, the library did recognize tim as a proper noun.
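Experiments like these are easy to repeat with a small helper. The following is a rough sketch, not the script I actually ran: proper_nouns, compare_casing, and demo are hypothetical names, and the text in demo is an abbreviated version of the passage. The helpers accept any callable that returns tokens with text and pos_ attributes, which a loaded spaCy pipeline does.

```python
from typing import Callable, Dict, Iterable, List


def proper_nouns(nlp: Callable[[str], Iterable], text: str) -> List[str]:
    """Return the text of every token the pipeline tags as PROPN."""
    return [tok.text for tok in nlp(text) if tok.pos_ == "PROPN"]


def compare_casing(nlp: Callable[[str], Iterable], text: str, word: str) -> Dict[str, List[str]]:
    """Tag the text as written and again with `word` downcased."""
    downcased = text.replace(word, word.lower())
    return {
        "original": proper_nouns(nlp, text),
        "downcased": proper_nouns(nlp, downcased),
    }


def demo() -> None:
    """Run the comparison with spaCy; needs spaCy and en_core_web_lg installed."""
    import spacy  # imported here so the helpers above work without spaCy

    nlp = spacy.load("en_core_web_lg")
    # Abbreviated sample, not the full passage from the post.
    text = "Call me Ishmael. It is a damp, drizzly November in my soul."
    for word in ("Ishmael", "November"):
        print(word, compare_casing(nlp, text, word))
```

Calling demo() prints, for each word, the proper nouns found before and after downcasing. The results may differ from mine depending on the model version.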

When I fed spaCy the sentence

I never go as a passenger; nor, though I am something of a salt, do I ever go to sea as a Commodore, or a Captain, or a Cook.

the library thought that Commodore, Captain, and Cook were proper nouns. If I downcase these words, spaCy does not flag them as proper nouns.

When processing the line

For as in this world, head winds are far more prevalent than winds from astern (that is, if you never violate the Pythagorean maxim), so for the most part the Commodore on the quarter-deck gets his atmosphere at second hand from the sailors on the forecastle

spaCy correctly flagged Commodore as a proper noun in this instance. Also, it did not classify Pythagorean as a proper noun; the word is proper but not a noun, i.e. it’s a proper adjective.
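One way to look at cases like Pythagorean is to group the capitalized words by the part of speech assigned to them. This is only a sketch: capitalized_by_pos is a hypothetical helper of my own, and it assumes the pipeline returns tokens with text and pos_ attributes, as spaCy's does.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List


def capitalized_by_pos(nlp: Callable[[str], Iterable], text: str) -> Dict[str, List[str]]:
    """Group capitalized tokens by the coarse part-of-speech tag they receive."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for tok in nlp(text):
        if tok.text[:1].isupper():
            groups[tok.pos_].append(tok.text)
    return dict(groups)


# Usage with spaCy (assumes the en_core_web_lg model is installed):
#
#   import spacy
#   nlp = spacy.load("en_core_web_lg")
#   print(capitalized_by_pos(nlp, "if you never violate the Pythagorean maxim"))
```

Capitalized words that land under a key other than PROPN, such as ADJ, are the ones that capitalization-based searches would wrongly report.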

TANSTAAFL

My script above has only six lines of code. But it depends on a library that uses a 588 MB language model. [1]


[1] “TANSTAAFL” stands for “There ain’t no such thing as a free lunch.” It comes from The Moon is a Harsh Mistress by Heinlein.

Incidentally, when I fed “The term TANSTAAFL comes from The Moon is a Harsh Mistress by Heinlein.” to spaCy, it flagged Harsh and Mistress as proper nouns.

When I fed it “The term TANSTAAFL comes from ‘The moon is a harsh mistress’ by Heinlein.” the library correctly tagged harsh as an adjective and mistress as a (non-proper) noun.