It’s fascinating that there’s such a thing as the World Jigsaw Puzzle Championship. The winning team of the two-person thousand-piece puzzle round can assemble a Ravensburger puzzle in less than an hour, which works out to about 3½ seconds per piece.

It makes you wonder, how could you measure the hardness of a jigsaw puzzle? And what would a “hardest puzzle” look like?

You can find some puzzles that are claimed to be “hardest.” A first step to creating a hard puzzle is removing the front image entirely, eliminating visual cues. Some puzzles do this. This can be partly simulated with a normal puzzle by putting it together with the image side face down.

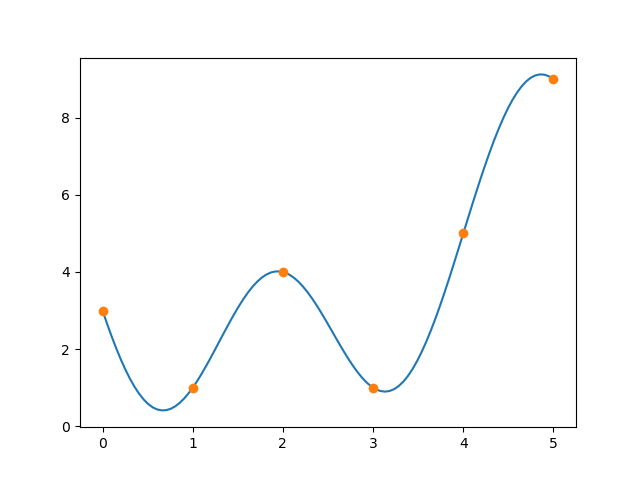

But you can do more: make every piece a square of exactly the same size, and make the “tab” and “blank” on each of the four sides of each square exactly the same size, shape and alignment. This gives $2^4 = 16$ possible tab/blank configurations for a piece; however, because a piece can be rotated, there are actually only six distinct kinds of puzzle piece. Now you are left with a bare minimum of shape cues to help you assemble the puzzle.
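The count of six can be checked by brute force. The following is a minimal sketch, assuming each side is encoded as 1 (tab) or 0 (blank) and that two pieces are the same kind when one is a rotation of the other; the encoding and the helper name `canonical` are illustrative choices, not part of the original argument.

```python
from itertools import product

def canonical(piece):
    """Return the lexicographically smallest rotation of a 4-tuple of sides."""
    return min(piece[i:] + piece[:i] for i in range(4))

# Each side is 1 (tab) or 0 (blank), listed clockwise starting from the top.
all_pieces = list(product((0, 1), repeat=4))

# Pieces that differ only by a rotation share the same canonical form.
kinds = sorted({canonical(p) for p in all_pieces})

print(len(all_pieces))  # 16
print(len(kinds))       # 6
for kind in kinds:
    print(kind)
```

The six kinds are: no tabs, one tab, two adjacent tabs, two opposite tabs, three tabs, and four tabs.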

For the sake of the argument, one can ignore the “edge” pieces. This is justified because, for a rectangular puzzle, the number of edge pieces in proportion to the interior pieces becomes smaller and smaller as you scale up the size. For example, for a 36 × 28 = 1008 piece puzzle, there are (2 × 36 + 2 × 28 − 4) = 124 edge pieces, about 12 percent of the whole. The ratio shrinks as you scale up puzzle size. And there is such a thing as a 42,000-piece jigsaw puzzle.
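A short sketch of how the edge fraction shrinks with size. The 36 × 28 case is the one from the text; the other grid sizes are arbitrary illustrative choices, not dimensions of any real puzzle.

```python
def edge_fraction(rows, cols):
    """Return (edge pieces, total pieces, edge share) for a rows x cols puzzle."""
    total = rows * cols
    edge = 2 * rows + 2 * cols - 4  # the four corners are counted only once
    return edge, total, edge / total

# 36 x 28 is from the text; the larger sizes are hypothetical.
for rows, cols in [(36, 28), (100, 100), (1000, 1000)]:
    edge, total, share = edge_fraction(rows, cols)
    print(f"{rows} x {cols}: {edge} of {total} pieces are edge pieces ({share:.1%})")
```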

The problem of assembling such a puzzle is known to be NP-complete. This means that the best known algorithms require compute time that, in the worst case, grows exponentially as the number of pieces is increased.

Of course there are simpler special cases. Consider a checkerboard configuration, in which the pieces at “black square” locations have four tabs and the pieces at “red square” locations have four blanks, so there are only two different kinds of piece. A competent puzzler could assemble this at one piece per second, or about 17 minutes for a 1000-piece puzzle.

However, as far as we know, the number of “special cases” like this is vanishingly small for large puzzles. An intuition for this can be taken from Kolmogorov complexity theory, which uses a counting argument to show that only very few long strings can be compressed to a short program.

Most cases are really hard, for example those constructed by making a totally random tab-or-blank assignment at each piece-to-piece boundary. Solving such a puzzle is difficult because of ambiguities: many pieces fit locally at any given spot, so you may have the puzzle largely complete and still need to backtrack because of a wrong decision made early.
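To make the random construction and the backtracking concrete, here is a minimal sketch on a tiny grid. It is an illustration under stated assumptions, not a serious solver: the names `make_puzzle` and `solve` are hypothetical, sides are encoded as +1 (tab), -1 (blank) and 0 (flat outer edge), and, unlike the idealized puzzle above, flat outer edges are kept so the grid has a boundary.

```python
import random

def make_puzzle(rows, cols, rng):
    """Cut a rows x cols grid with a random tab/blank on every interior boundary.

    Each piece is (top, right, bottom, left): +1 tab, -1 blank, 0 flat outer edge.
    """
    # h[r][c] is the boundary between (r, c) and (r, c + 1);
    # v[r][c] is the boundary between (r, c) and (r + 1, c).
    h = [[rng.choice((1, -1)) for _ in range(cols - 1)] for _ in range(rows)]
    v = [[rng.choice((1, -1)) for _ in range(cols)] for _ in range(rows - 1)]
    pieces = []
    for r in range(rows):
        for c in range(cols):
            top = -v[r - 1][c] if r > 0 else 0
            bottom = v[r][c] if r < rows - 1 else 0
            left = -h[r][c - 1] if c > 0 else 0
            right = h[r][c] if c < cols - 1 else 0
            pieces.append((top, right, bottom, left))
    rng.shuffle(pieces)  # spill the box on the floor
    return pieces

def solve(pieces, rows, cols):
    """Fill the grid in row-major order with backtracking; count placements."""
    grid = [None] * (rows * cols)
    used = [False] * len(pieces)
    placements = 0

    def fits(piece, pos):
        r, c = divmod(pos, cols)
        top, right, bottom, left = piece
        # A side is flat exactly when it lies on the outer boundary.
        if (top == 0) != (r == 0) or (bottom == 0) != (r == rows - 1):
            return False
        if (left == 0) != (c == 0) or (right == 0) != (c == cols - 1):
            return False
        # A tab (+1) must meet a blank (-1) on the already placed neighbors.
        if r > 0 and grid[pos - cols][2] + top != 0:
            return False
        if c > 0 and grid[pos - 1][1] + left != 0:
            return False
        return True

    def place(pos):
        nonlocal placements
        if pos == rows * cols:
            return True
        for i, piece in enumerate(pieces):
            if used[i]:
                continue
            for k in range(4):  # try all four rotations
                rotated = piece[k:] + piece[:k]
                if fits(rotated, pos):
                    placements += 1
                    used[i], grid[pos] = True, rotated
                    if place(pos + 1):
                        return True
                    used[i], grid[pos] = False, None  # backtrack
        return False

    return place(0), grid, placements

rng = random.Random(0)
for size in (3, 4):
    solved, _, placements = solve(make_puzzle(size, size, rng), size, size)
    print(f"{size} x {size}: solved={solved}, placements tried={placements}")
```

Any placements beyond `rows * cols` are steps that were later undone. The demo is deliberately kept to very small grids, since the amount of backtracking can grow quickly with size.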

A very weak upper bound on the assembly time can be derived by considering the brute-force case. For a 1000-piece puzzle, there are 1000! (factorial) possible ways to (attempt to) assemble the puzzle. Suppose one places every piece and then fully backtracks for each trial; this gives 1000 × 1000! puzzle piece placement steps. Using Stirling’s approximation, this is approximately $4 \times 10^{2570}$ placement steps.

A human, assuming generously a rate of one step per second, at 31 million seconds/year nonstop, would take on the order of $10^{2563}$ years. With 10 billion simultaneous puzzlers, that becomes on the order of $10^{2553}$ years.

Now assume the on-record world’s fastest computer, the ORNL Frontier system (though see here), at roughly 10 exaflops ($10^{19}$ operations per second) mixed precision, and assume one puzzle step per operation (overly optimistic). Assume further that every atom in the known universe (est. $10^{82}$) is a Frontier computer system. In this case, solving the puzzle takes on the order of $10^{2462}$ years. That’s a hundred thousand billion billion … (repeat “billion” 273 times here) years.
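These orders of magnitude are easy to check by working in base-10 logarithms, since the numbers themselves overflow ordinary floating point. The sketch below uses the rates stated in the text (one step per second, 10 billion puzzlers, $10^{19}$ operations per second on each of $10^{82}$ atoms); the function and variable names are illustrative.

```python
import math

N = 1000                  # number of pieces
SECONDS_PER_YEAR = 3.1e7  # about 31 million seconds per year

def log10_factorial_stirling(n):
    """log10(n!) from Stirling: n! ~ sqrt(2*pi*n) * (n/e)**n."""
    return (n * math.log(n) - n + 0.5 * math.log(2 * math.pi * n)) / math.log(10)

# log10 of the brute-force step count N * N!
log10_steps = math.log10(N) + log10_factorial_stirling(N)

# Cross-check against the log-gamma function: lgamma(n + 1) = ln(n!).
log10_steps_exact = math.log10(N) + math.lgamma(N + 1) / math.log(10)

def log10_years(log10_steps_per_second):
    """log10 of the years needed at the given rate (log10 of steps per second)."""
    return log10_steps - log10_steps_per_second - math.log10(SECONDS_PER_YEAR)

scenarios = {
    "one human": 0,                        # 1 step per second
    "10 billion humans": 10,               # 1e10 steps per second
    "a Frontier on every atom": 19 + 82,   # 1e19 ops/s on each of 1e82 atoms
}

print(f"steps: about 10^{log10_steps:.1f} (lgamma check: 10^{log10_steps_exact:.1f})")
for name, log10_rate in scenarios.items():
    print(f"{name}: about 10^{log10_years(log10_rate):.1f} years")
```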

As I mentioned, this is a very weak upper bound. One could solve the problem using a SAT solver with sophisticated CDCL backtracking. Or one could use special methods derived from statistical mechanics that handle random SAT instances. However, to the best of our knowledge, the runtime still grows exponentially as a function of puzzle size. The best worst-case computational complexity for SAT solvers currently known is order $O^*(1.306995^n)$ (see here, here, and here). So, simply by constructing puzzles in the way we’ve described with more and more pieces, you will soon reach a point of having a puzzle that is unfathomably difficult to solve.

It’s conceivable that a yet-undiscovered method could be found for which the solution cost would in fact grow polynomially instead of exponentially. But people have been trying to settle this question, the P versus NP problem, one way or the other for over 50 years and it’s still unsolved, with an open million-dollar prize for proof or counterexample. So we just don’t know.

What about quantum computing? It can solve some exponential complexity problems like factoring in polynomial time. But how about the SAT problem? It is simply not known (see here, here) whether a polynomial-time quantum algorithm exists for arbitrary NP-complete problems. None are known, and it might not be possible at all (though research continues).

So we’re faced with a curious possibility: a jigsaw puzzle could be constructed such that, if you are holding the box of pieces in your hand and spill it on the floor, there is no single or joint intelligence or computational mechanism, existing or even possible, in the entire universe that could ever reassemble it.