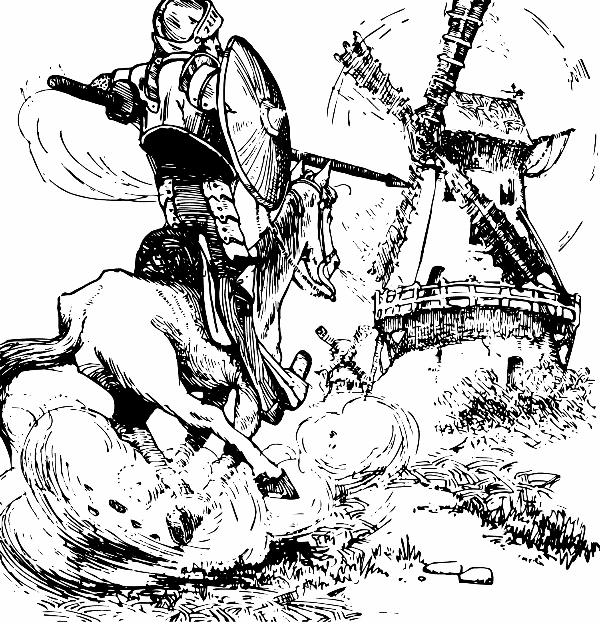

In the Broadway musical Man of La Mancha, Don Quixote sings

To dream the impossible dream

To fight the unbeatable foe

To bear with unbearable sorrow

To run where the brave dare not go

Yesterday my daughter asked me to integrate the impossible integral, and this post has a few thoughts on the quixotic quest to run where the brave calculus student dare not go.

The problem apparently required computing the indefinite integral of the square root of sine:

$$\int \sqrt{\sin x}\, dx$$

I say apparently for reasons that will soon be clear.

My first thought was that some sort of clever u-substitution would work. This was coming from a homework problem, so I was in the mindset of expecting every problem to be doable. If I ran across this integral while I had my consultant hat on rather than my father/tutor hat, I would have thought “It probably doesn’t have an elementary closed form because most integrals don’t.”

It turns out I should have had my professional hat on, because the integral indeed does not have an elementary closed form. There is a well-known function which is an antiderivative of √sin, but it’s not an elementary function. (“Well-known” relative to professional mathematicians, not relative to calculus students.)

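You can evaluate the integral as

$$\int \sqrt{\sin x}\, dx = -2\, E\!\left(\left.\frac{\pi - 2x}{4} \,\right|\, 2\right) + C$$

where E is Legendre’s “elliptic integral of the second kind,” written here in the convention E(φ | m) = ∫₀^φ √(1 − m sin²θ) dθ.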
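Here is a quick numerical sanity check of the claim that −2 E((π − 2x)/4 | 2) is an antiderivative of √sin on an interval where sine is positive. It is only a sketch: the helper names `simpson`, `ellip_e`, and `antiderivative` are made up for illustration, E is computed by brute-force quadrature from its defining integral E(φ | m) = ∫₀^φ √(1 − m sin²θ) dθ rather than by a library routine, and the interval [0.5, 2] is an arbitrary choice.

```python
import math

def simpson(f, a, b, n=2000):
    """Composite Simpson's rule with n (even) subintervals; works for b < a too."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def ellip_e(phi, m):
    """E(phi | m) computed directly from its integral definition (illustrative only)."""
    return simpson(lambda t: math.sqrt(1 - m * math.sin(t) ** 2), 0.0, phi)

def antiderivative(x):
    """Candidate antiderivative of sqrt(sin x), valid for 0 <= x <= pi."""
    return -2 * ellip_e((math.pi - 2 * x) / 4, 2)

a, b = 0.5, 2.0  # arbitrary interval inside (0, pi)
direct = simpson(lambda x: math.sqrt(math.sin(x)), a, b)
via_e = antiderivative(b) - antiderivative(a)
print(direct, via_e)  # the two values should agree to many digits
```

If the two printed numbers match, the difference of the elliptic-integral expression at the endpoints reproduces the definite integral of √sin, which is what an antiderivative is supposed to do.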

I assume it wasn’t actually necessary to compute the antiderivative, though I didn’t see the full problem. In the artificial world of the calculus classroom, everything works out nicely. And this is a shame.

For one thing, it creates a false impression. Most integrals are “impossible” in the sense of not having an elementary closed form. Students who go on to use calculus for anything more than artificial homework problems may incorrectly assume they’ve done something wrong when they encounter an integral in the wild.

For another, it’s a missed opportunity. Or maybe two or three missed opportunities. These may or may not be appropriate to bring up, depending on how receptive the audience is. (But we said at the beginning we would “run where the brave calculus student dare not go.”)

It’s a missed opportunity to emphasize what integrals really are, to separate meaning from calculation technique. Is the integral of √sin impossible? Not in the sense that it doesn’t exist. Yes, there are functions whose derivative is √sin, at least if we limit ourselves to a range where sine is not negative. No, the integral does not exist in the sense of a finite combination of functions a calculus student would have seen.

It’s also a missed opportunity to show that we can define new functions as the solution to problems we can’t solve otherwise. The elliptic integrals mentioned above are functions defined in terms of integrals that cannot be computed in more elementary terms. By giving the function a name, we can compare where it comes up in other contexts. For example, I wrote a few months ago about the problem of finding the length of a serpentine wall. This also pops out of a calculus problem that doesn’t have an elementary solution, and in fact it also has a solution in terms of elliptic integrals.

Finally, it’s a missed opportunity to give a glimpse of the wider mathematical landscape. If not every elementary function has an elementary antiderivative, do they at least have antiderivatives in terms of special functions such as elliptic integrals? No, but that’s a good question.

Any continuous function has an antiderivative, and that antiderivative might be an elementary function, or it might be a combination of elementary functions and familiar special functions. Or it might be an obscure special function, something not exactly common, but something that has been named and studied before. Or it might be a perfectly valid function that hasn’t been given a name yet. Maybe it’s too specialized to deserve a name, or maybe you’ve discovered something that comes up repeatedly and deserves to be cataloged.
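To make “any continuous function has an antiderivative” concrete, here is a rough sketch that builds one numerically as F(x) = ∫ₐˣ f(t) dt and checks that its slope matches f. The function names, the base point a = 0.5, and the test point x = 1 are all arbitrary choices for illustration; nothing here requires F to have a name or a closed form.

```python
import math

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule with n (even) subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def make_antiderivative(f, a):
    """Return F with F(x) = integral of f from a to x, evaluated numerically."""
    def F(x):
        return simpson(f, a, x)
    return F

f = lambda t: math.sqrt(math.sin(t))
F = make_antiderivative(f, 0.5)  # base point 0.5 is an arbitrary assumption

# Central difference approximation to F'(x) at x = 1
x, h = 1.0, 1e-4
slope = (F(x + h) - F(x - h)) / (2 * h)
print(slope, f(x))  # these should be close
```

The function `F` is a perfectly valid antiderivative of √sin on this range even though the code never mentions elliptic integrals.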

This touches more broadly on the subject of what functions exist versus what functions have been named. Students implicitly assume these two categories are the same. Here’s an example of the same phenomenon in probability. It also touches on the gray area between what has been named and what hasn’t, and how you decide whether something deserves to be named.

Update: The next post gives a simple approximation to the integral in this post.