Given an integer n, suppose you want to compute ⌊√n⌋, the greatest integer less than or equal to the square root of n. One reason you may want to compute ⌊√n⌋ is to know when you can stop trial division when factoring n. Similarly, ⌊√n⌋ gives you a starting point for Fermat’s factorization algorithm.

With floating point arithmetic

The obvious approach to computing ⌊√n⌋ would be to compute the square root of n as a real number and then round the result down.

Suppose we go down that route. How would we compute √n as real number? The usual way is to use Newton’s square root method. First you make an initial guess at the square root of n, say a. Then repeatedly update your guess to be the average of your previous guess a and n/a:

a ← (a + n/a) /2.

This is an ancient algorithm, going back to at least 1000 BC in Babylon. We call it Newton’s method because it is a special case of Newton’s root-finding method, applied to f(x) = x² − a.

Newton’s square root algorithm is implemented in computer hardware to this day. In some sense, we still compute square roots the same way the ancient Babylonians did.

Without floating point arithmetic

Our original problem was to find an integer, the largest integer no greater than the integer n. Can we take advantage of the fact that we’re working with integers?

A general approach to solving problems over the integers is to solve the same problem over the real numbers, then convert the result to an integer. That’s what we did above, but what if we incorporate the “integerification” into Newton’s algorithm itself so that we never work with real numbers?

This is a reasonable idea, but we don’t know a priori that it will work. Sometimes integer problems can be solved by integer analogs of real algorithms, but sometimes not. Integer optimization problems are harder than real number optimization problems because the optimal integer solution cannot in general be found by first finding the optimal real solution and rounding. And even when this approach works, it may not be the most efficient approach.

It turns out that the integer analog of Newton’s method does work. Bressoud [1] gives pseudocode for an algorithm which I write in Python below.

def sqrt_floor(n):

a = n

b = (n + 1) // 2

while b < a:

a = b

b = (a*a + n) // (2*a)

return a

Note that in Python the // operator carries out integer division, i.e. a // b computes ⌊a/b⌋.

The somewhat opaque line updating b inside the loop is just Newton’s method, averaging a and n/a, rounded down.

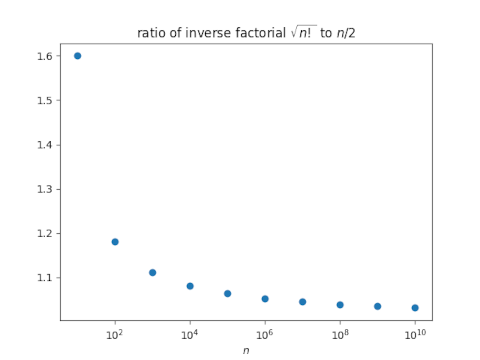

This algorithm could run on hardware with no floating point capability, only integer arithmetic. It will also work on extended precision integers where floating point would fail. For example, the following Python code

from math import factorial, sqrt

sqrt(factorial(1000))

produces an error “OverflowError: int too large to convert to float.” But

sqrt_floor(factorial(1000))

works just fine.

Update: FIPS 184-5

After writing this post, I was looking through the NIST FIPS 184-5 Digital Signature Standard (DSS). In Appendix B.4 the standard gives an algorithm for determining whether a large integer is a square. The algorithm in the appendix is essentially the algorithm above. The line that carries out the Newton method update is not explained, but this post explains where this otherwise mysterious line comes from.

[1] David M. Bressoud. Factorization and Primality Testing. Springer, 1989.