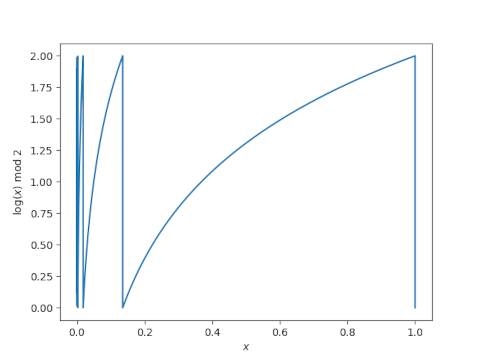

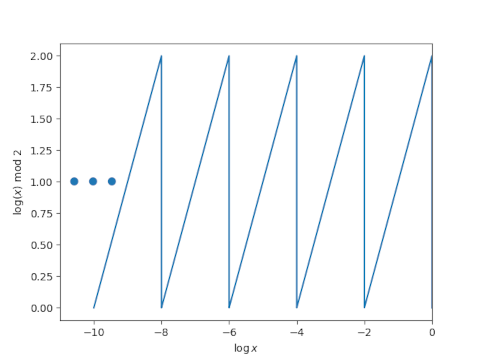

Last week I wrote about a hypothetical radio station that plays the top 100 songs in some genre, with songs being chosen randomly according to Zipf’s law. The nth most popular song is played with probability proportional to 1/n.

This post is a variation on that post, looking at text consisting of the 1,000 most common words in a language, where word frequencies follow Zipf’s law.
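To make the setup concrete, here is the normalization written out, assuming the pure 1/n form of Zipf’s law described above with a vocabulary of N = 1,000 words. The symbols p_n and H_N are notation introduced here for illustration.

$$
p_n = \frac{1}{n\,H_N}, \qquad H_N = \sum_{k=1}^{N} \frac{1}{k}, \qquad H_{1000} \approx 7.485
$$

Under that assumption the most common word makes up roughly 13% of the text, while the 1,000th word appears about once in every 7,485 words. So the rarest words alone account for much of the waiting.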

How many words of text would you expect to read until you’ve seen all 1,000 words at least once? The math is the same as in the radio station post. The simulation code is the same too: I just changed a parameter from 100 to 1,000.

The result of a thousand simulation runs was an average of 41,246 words with a standard deviation of 8,417.
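The simulation code itself lives in the radio station post and isn’t reproduced here. What follows is only a minimal sketch of how such a simulation might look, using nothing but the Python standard library. The function names `words_until_all_seen` and `simulate`, the batch size, and the seed are illustrative choices, not taken from the original code.

```python
import random
import statistics
from itertools import accumulate


def words_until_all_seen(vocab_size, rng, batch=10_000):
    """Draw words with probability proportional to 1/rank and return
    how many draws it takes until every word has appeared at least once."""
    # Unnormalized Zipf weights 1/1, 1/2, ..., 1/vocab_size.
    # random.choices only needs cumulative weights, not normalized ones.
    cum_weights = list(accumulate(1.0 / n for n in range(1, vocab_size + 1)))
    population = range(vocab_size)
    seen = set()
    count = 0
    while True:
        # Draw in batches for speed. Draws are independent, so discarding
        # the unused tail of the final batch does not bias the count.
        for word in rng.choices(population, cum_weights=cum_weights, k=batch):
            count += 1
            seen.add(word)
            if len(seen) == vocab_size:
                return count


def simulate(vocab_size=1000, runs=1000, seed=0):
    """Repeat the experiment and return (mean, sample standard deviation)."""
    rng = random.Random(seed)
    counts = [words_until_all_seen(vocab_size, rng) for _ in range(runs)]
    return statistics.mean(counts), statistics.stdev(counts)
```

Calling `simulate(vocab_size=1000, runs=1000)` runs the experiment described above. It takes a while in pure Python, and the exact mean and standard deviation will vary with the seed.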

This has pedagogical implications. Say you were learning a foreign language by studying naturally occurring text with a relatively small vocabulary, such as newspaper articles. You might have to read a lot of text before you’ve seen all of the thousand most common words.

On the one hand, it’s satisfying to read natural text. And it’s good to have the most common words reinforced the most. But it might be more effective to have slightly engineered text, text that has been subtly edited to make sure common words have not been left out. Ideally this would be done with such a light touch that it isn’t noticeable, unlike heavy-handed textbook dialogs.