What does an eighteenth century French mathematician have to do with the Ethereum cryptocurrency?

A pseudorandom number generator based on Legendre symbols, known as Legendre PRF, has been proposed as part of a zero knowledge proof mechanism to demonstrate proof of custody in Ethereum 2.0. I’ll explain what each of these terms mean and include some Python code.

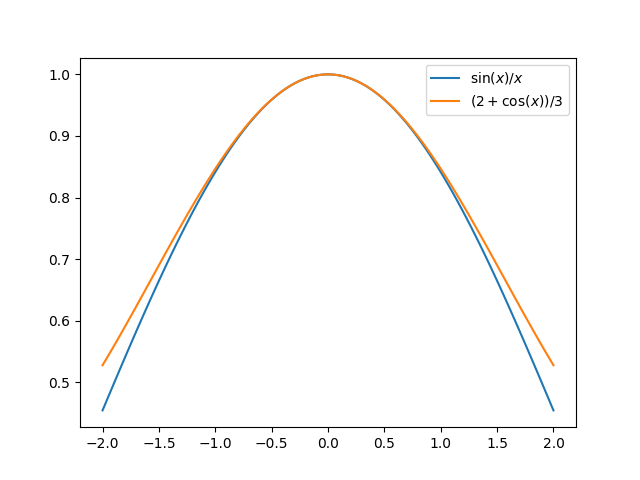

The equation x² = a mod p is solvable for some values of a and not for others. The Legendre symbol

is defined to be 1 if a has a square root mod p, and −1 if it does not. Here a is a positive integer and p is a (large) prime [1]. Note that this is not a fraction, though it looks like one.

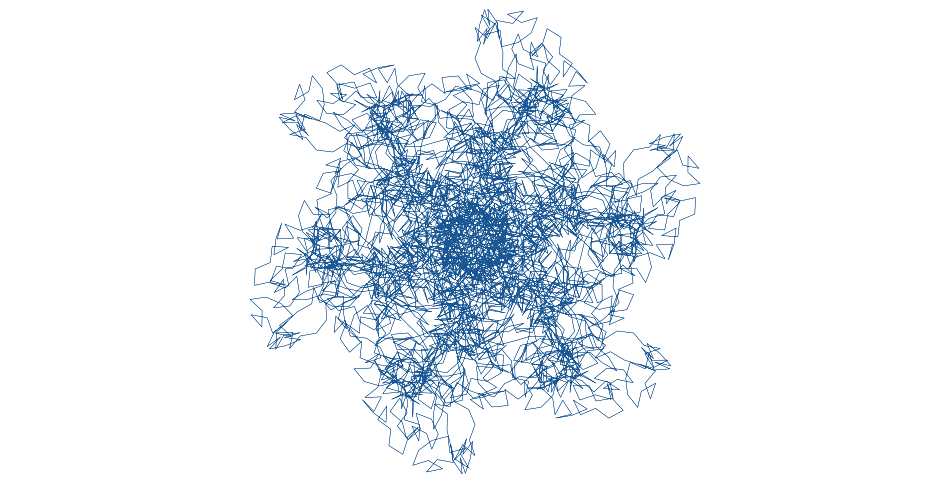

As a varies, the Legendre symbols vary randomly. Not literally randomly, of course, but the act random enough to be useful as a a pseudorandom number generator.

Legendre PRF

Generating bits by computing Legendre symbols is a lot more work than generating bits using a typical PRNG, so what makes the Legendre PRF of interest to Ethereum?

Legendre symbols can be computed fairly efficiently. You wouldn’t want to use the Legendre PRF to generate billions of random numbers for some numerical integration computation, but for a zero knowledge proof you only need to generate a few dozen bits.

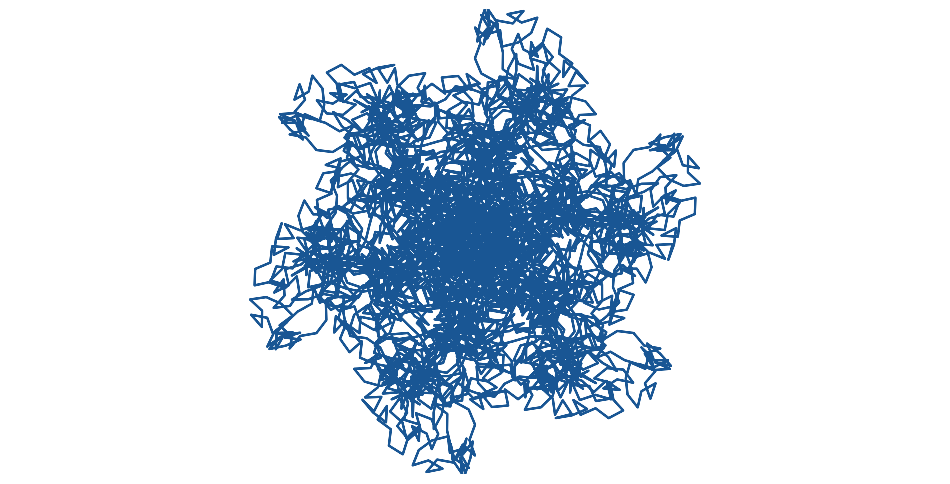

To prove that you know a key k, you can generate a string of pseudorandom bits that depend on the key. If you can do this for a few dozen bits, it’s far more likely that you know the key than that you have guessed the bits correctly. Given k, the Legendre PRF computes

for n consecutive values of x [2].

One reason this function is of interest is that it naturally lends itself to multiparty computation (MPC). The various parties could divide up the range of x values that each will compute.

The Legendre PRF has not been accepted to be part of Ethereum 2.o. It’s only been proposed, and there is active research into whether it is secure enough.

Python code

Here’s a little Python scrypt to demonstrate using Lk(x).

from sympy import legendre_symbol

def L(x, k, p):

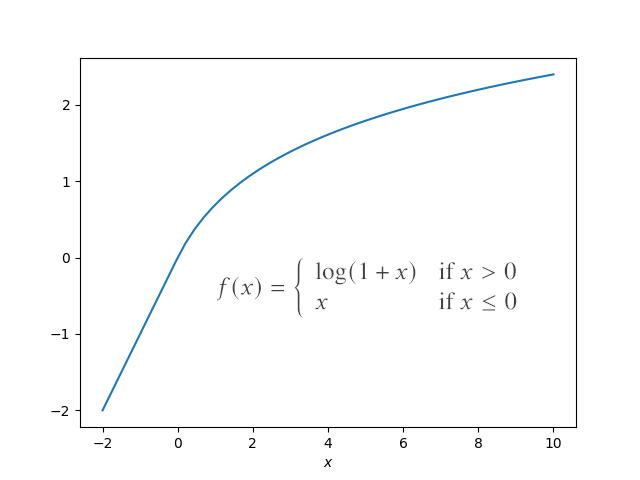

return (1 - legendre_symbol(x + k, p)) // 2

k = 20250626

p = 2**521 - 1

print([L(x, k, p) for x in range(10)])

This produces the following.

[1, 1, 1, 1, 0, 0, 1, 0, 0, 1]

Related posts

[1] There is a third possibility: the Legendre symbol equals 0 if a is a multiple of p. We can safely ignore this possibility for this post.

[2] The Legendre symbol takes on the values ±1 and so we subtract this from 1 to get values {0, 2}, then divide by 2 to get bits {0, 1}.