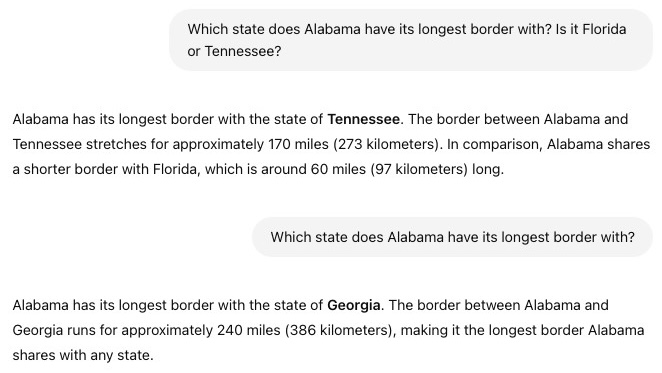

Anyone with more than casual experience with ChatGPT knows that prompt engineering is a thing. Minor or even trivial changes in a chatbot prompt can have significant effects, sometimes even dramatic ones, on the output [1]. For simple requests it may not make much difference, but for detailed requests it could matter a lot.

Industry leaders said they thought this would be a temporary limitation. But we are now a year and a half into the GPT-4 era, and it’s still a problem. And since the number of possible prompts has scaling that is exponential in the prompt length, it can sometimes be hard to find a good prompt given the task.

One proposed solution is to use search procedures to automate the prompt optimization / prompt refinement process. Given a base large language model (LLM) and an input (a prompt specification, commonly with a set of prompt/answer pair samples for training), a search algorithm seeks the best form of a prompt to use to elicit the desired answer from the LLM.

This approach is sometimes touted [2] as a possible solution to the problem. However, it is not without limitations.

A main one is cost. With this approach, one search for a good prompt can take many, many trial-and-error invocations of the LLM, with cost measured in dollars compared to the fraction of a cent cost of a single token of a single prompt. I know of one report of someone who does LLM prompting with such a tool full time for his job, at cost of about $1,000/month (though, for certain kinds of task, one might alternatively seek a good prompt “template” and reuse that across many near-identical queries, to save costs).

This being said, it would seem that for now (depending on budget) our best option for difficult prompting problems is to use search-based prompt refinement methods. Various new tools have come out recently (for example, [3-6]). The following is a report on some of my (very preliminary) experiences with a couple of these tools.

PromptAgent

The first is PromptAgent [5]. It’s a research code available on GitHub. The method is based on Monte Carlo tree search (MCTS), which tries out multiple chains of modification of a seed prompt and pursues the most promising. MCTS can be a powerful method, being part of the AlphaGo breakthrough result in 2016.

I ran one of the PromptAgent test problems using GPT-4/GPT-3.5 and interrupted it after it rang up a couple of dollars in charges. Looking at the logs, I was somewhat amazed that it generated long detailed prompts that included instructions to the model for what to pay close attention to, what to look out for, and what mistakes to avoid—presumably based on inspecting previous trial prompts generated by the code.

Unfortunately, PromptAgent is a research code and not fully productized, so it would take some work to adapt to a specific user problem.

DSPy

DSPy [6] on the other hand is a finished product available for general users. DSPy is getting some attention lately not only as a prompt optimizer but also more generally as a tool for orchestrating multiple LLMs as agents. There is not much by way of simple examples for how to use the code. The website does have an AI chatbot that can generate sample code, but the code it generated for me required significant work to get it to behave properly.

I ran with the MIPRO optimizer which is most well-suited to prompt optimization. My experience with running the code was that it generated many random prompt variations but did not do in-depth prompt modifications like PromptAgent. PromptAgent does one thing, prompt refinement, and must do it well, unlike DSPy which has multiple uses. DSPy would be well-served to have implemented more powerful prompt refinement algorithms.

Conclusion

I would wholeheartedly agree that it doesn’t seem right for an LLM would be so dependent on the wording of a prompt. Hopefully, future LLMs, with training on more data and other improvements, will do a better job without need for such lengthy trial-and-error processes.

References

[1] “Quantifying Language Models’ Sensitivity to Spurious Features in Prompt Design or: How I learned to start worrying about prompt formatting,” https://openreview.net/forum?id=RIu5lyNXjT

[2] “AI Prompt Engineering Is Dead” (https://spectrum.ieee.org/prompt-engineering-is-dead, March 6, 2024

[3] “Evoke: Evoking Critical Thinking Abilities in LLMs via Reviewer-Author Prompt Editing,” https://openreview.net/forum?id=OXv0zQ1umU

[4] “Large Language Models as Optimizers,” https://openreview.net/forum?id=Bb4VGOWELI

[5] “PromptAgent: Strategic Planning with Language Models Enables Expert-level Prompt Optimization,” https://openreview.net/forum?id=22pyNMuIoa

[6] “DSPy: Compiling Declarative Language Model Calls into State-of-the-Art Pipelines,” https://openreview.net/forum?id=sY5N0zY5Od

I think it is entirely in keeping with how these models work that the details of the prompt are critical. The models can do many amazing things, but they don’t think and don’t and can’t know what you mean the way that a person can or some hypothetical AGI could.

Hi John,

What is the point of prompt optimization? You know the correct answer and want your prompt trigger the correct answer?

Why then ask the machine in the first place?

Put another way: what is the target of the optimization?

Cheers

Harald

@Harald, prompting when you know the answer is good for evaluation. How well the system performs when you do know the answer gives you an idea how much confidence to have to questions when you don’t know the answer. c.f. Gell-Mann amnesia.

Sometimes you may not know the answer a priori but you’re able to evaluate the answer. For example, factoring is hard, but multiplication is easy. Maybe a number is too big for you to factor, but you can tell whether a supposed factorization is correct.

In that spirit, you might tweak a prompt until a system gives you a verifiably correct answer.

In prompt optimization, a lot of times people are looking for, not just a single prompt to get a single piece of information, but more of a “prompt style” or “prompt instructions” that can be used across many prompts. For example, it’s been found that for many user queries, adding the instructions “Please think step by step and show your work” greatly helps improve response accuracy. So, in practice one might train on many known correct question/answer pairs to discover a way of prompting that works for many similar cases for which you don’t have a correct answer. Hope this makes sense —