C. A. R. Hoare wrote an article How Did Software Get So Reliable Without Proof? in 1996 that still sounds contemporary for the most part.

In the 1980s many believed that programs could not get much bigger unless we started using formal proof methods. The argument was that bugs are fairly common, and that each bug has the potential to bring a system down. Therefore the only way to build much larger systems was to rely on formal methods to catch bugs. And yet programs continued to get larger and formal methods never caught on. Hoare asks

Why have twenty years of pessimistic predictions been falsified?

Another twenty years later we can ask the same question. Systems have gotten far larger, and formal methods have not become common. Formal methods are used—more on that shortly—but have not become common.

Better in practice than in theory

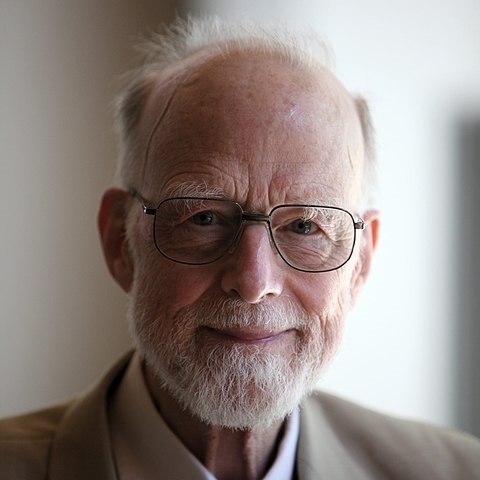

It’s interesting that Hoare was the one to write this paper. He is best known for the quicksort, a sorting algorithm that works better in practice than in theory! Quicksort is commonly used in practice, even though has terrible worst-case efficiency, because its average efficiency has optimal asymptotic order [1], and in practice it works better than other algorithms with the same asymptotic order.

Economic considerations

It is logically possible that the smallest bug could bring down a system. And there have been examples, such as the Mars Climate Orbiter, where a single bug did in fact lead to complete failure. But this is rare. Most bugs are inconsequential.

Some will object “How can you be so blasé about bugs? A bug crashed a $300 million probe!” But what is the realistic alternative? Would spending an additional billion dollars on formal software verification have prevented the crash? Possibly, though not certainly, and the same money could send three more missions to Mars. (More along these lines here.)

It’s all a matter of economics. Formal verification is extremely tedious and expensive. The expense is worth it in some settings and not in others. The software that runs pacemakers is more critical than the software that runs a video game. For most software development, less formal methods have proved more cost effective at achieving acceptable quality: code reviews, unit tests, integration testing, etc.

Formal verification

I have some experience with formal software verification, including formal methods software used by NASA. When someone says that software has been formally verified, there’s an implicit disclaimer. It’s usually the algorithms have been formally verified, not the implementation of those algorithms in software. Also, maybe not all the algorithms have been verified, but say 90%, the remaining 10% being too difficult to verify. In any case, formally verified software can and has failed. Formal verification greatly reduces the probability of encountering a bug, but it does not reduce the probability to zero.

There has been a small resurgence of interest in formal methods since Hoare wrote his paper. And again, it’s all about economics. Theorem proving technology has improved over the last 20 years. And software is being used in contexts where the consequences of failure are high. But for most software, the most economical way to achieve acceptable quality is not through theorem proving.

There are also degrees of formality. Full theorem proving is extraordinarily tedious. If I remember correctly, one research group said that they could formally verify about one page of a mathematics textbook per man-week. But there’s a continuum between full formality and no formality. For example, you could have formal assurance that your software satisfies certain conditions, even if you can’t formally prove that the software is completely correct. Where you want to be along this continuum of formality is again a matter of economics. It depends on the probability and consequences of errors, and the cost of reducing these probabilities.

Related posts

[1] The worst-case performance of quicksort is O(n²) but the average performance is O(n log n).

Photograph of C. A. R. Hoare by Rama, Wikimedia Commons, Cc-by-sa-2.0-fr, CC BY-SA 2.0 fr

The other reason that formal verification has never taken off, often forgotten, is that it assumes fully specified, fixed requirements at the start of development. (What else could it be verified against?)

In over two decades of software development, I have never, ever, ever, ever, ever seen a project with completely fixed and specified requirements at the start of development.

In fact, the norm is for requirements to shift to some extent or another to the very end of development.

For this reason, test-driven development is the more practical way to do verification.

Marc, similar experience. I’ve never seen fixed requirements except for small components. When I managed software development projects, we never had fixed requirements. Seldom even written requirements.

Now that I’m a consultant, sometimes I do write small components that are fully specified. But that is far from typical software development.

I have always thought that computer scientists drove themselves into a dead end over proof. Mathematicians mean something much less formal by the word proof, roughly an argument that will convince someone else you are right. When I did a lot of programming I used to prove my code right in this sense (and the comments drew out the main limits of the proof). This can be quicker than extensive testing, and better, as it can sometimes be hard to construct test cases that reach obscure corners of the code .

The Shape of Code blog has some fun reads on this topic:

https://shape-of-code.coding-guidelines.com/2018/02/19/mathematical-proofs-contain-faults-just-like-software/

http://shape-of-code.coding-guidelines.com/tag/formal-methods/

Hi, John! I can’t believe you missed the opportunity to include this quote from Knuth: “Beware of bugs in the above code. I have only proved it correct, not tried it.”

The Government of Canada is dealing with the fallout of the poor rollout of their new Phoenix Pay System. If what your article is saying, and the above comments show, without going through a formal options assessment and setting out clear parameters for the product, many systems will be plagued with problems. The economics of the Phoenix Pay System debacle illustrate the need to project specifications that set out the project requirements and are adhered to; and only changed once the entire project team has discussed and vetted any changes.

Oh, and I am not a programmer. I am however, an engineer responsible for multi-million dollar projects that quickly go sideways when issues arise and are not properly mitigated.

There exist automated and semi-automated software verification tools that prove that the code is correct (according to the programming language specification).

One such tool is Frama-C with Jessie plugin (for formal proof of C programs). But you need to state your assumptions explicitly, using ACSL (ANSI/ISO C Specification Language) – in a comment for the function to be proven. The tool transforms ACSL + code into math to be proven.

Similar tools exist for Java.

Interesting that this is next to your post about power law distribution of passwords. I imagine the reason why no one cares about formal verification is that bugs themselves follow a power law. Perhaps in the C++ realm, memory corruptions are most common, race conditions 1/10th as common as memory corruptions, parser bugs 1/10, failure to exhaustively match all cases . . .

Well, we have tools for dealing with these common bugs: AddressSanitizer, UndefinedBehaviorSanitizer, fuzz testing, static analysis.

Nobody needs to remove *all* their bugs, they only need to remove the ones that get hit. If bugs were uniformly distributed over all classes of bugs, I imagine formal verification would be useful.

Yes, it’s all about economics.

A characteristic of formal proofs is that they are very difficult to change, i.e., to reflect changes in the requirements. My experience is that maintenance of a formal proof is much higher than maintaining code (formal proofs tend to be edifices that collapse when touched). There may be ways of writing proofs that are less costly to update, but researchers seem more interest in proving things than making it easier to change proofs.

The other problem with formal methods is that those involved behave like snake oil salesmen, with grandiose claims that have no connection to what they have actually proved. So they get laughed at and ignored. The field needs to sort out its intellectual dishonesty issues.

http://shape-of-code.coding-guidelines.com/2013/03/10/verified-compilers-and-soap-powder-advertising/

Derek: Nice post on verified compilers.

It seems that as software becomes more and more about machine learning (ML), that the landscape around formal proofs will change. Given the dynamic nature of the ‘algorithms’ in a neural net or other ML system, I am not sure how one would proceed with a traditional approach to an algorithm proof. I hear talk about AI/ML systems such as, “we don’t really know what the algorithm is in current systems like AlphaGoZero”, or “at some point in the future, we will have no hope of understanding sufficiently advanced AI”. Where will formal software proofs be then?