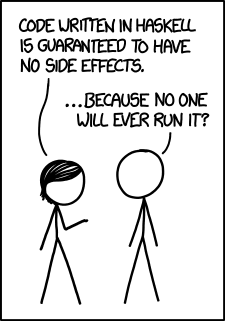

I’m reading Real World Haskell because one of my clients’ projects is written in Haskell. Some would say that “real world Haskell” is an oxymoron because Haskell isn’t used in the real world, as illustrated by a recent xkcd cartoon.

It’s true that Haskell accounts for a tiny portion of the world’s commercial software and that the language is more popular in research. (There would be no need to put “real world” in the title of a book on PHP, for example. You won’t find a lot of computer science researchers using PHP for its elegance and nice theoretical properties.) But people do use Haskell on real projects, particularly when correctness is a high priority.[1] In any case, Haskell is “real world” for me since one of my clients uses it. As I wrote about before, applied is in the eye of the client.

I’m not that far into Real World Haskell yet, but so far it’s just what I was looking for. Another book I’d recommend is Graham Hutton’s Programming in Haskell. It makes a good introduction to Haskell because it’s small (184 pages) and focused on the core of the language, not so much on “real world” complications.

A very popular introduction to Haskell is Learn You a Haskell for Great Good. I have mixed feelings about that one. It explains most things clearly and the informal tone makes it easy to read, but the humor becomes annoying after a while. It also introduces some non-essential features of the language up front that could wait until later or be left out of an introductory book.

* * *

[1] Everyone would say that it’s important for their software to be correct. But in practice, correctness isn’t always the highest priority, nor should it be necessarily. As the probability of error approaches zero, the cost of development approaches infinity. You have to decide what probability of error is acceptable given the consequences of the errors.

It’s more important that the software embedded in a pacemaker be correct than the software that serves up this blog. My blog fails occasionally, but I wouldn’t spend $10,000 to cut the error rate in half. Someone writing pacemaker software would jump at the chance to reduce the probability of error so much for so little money.

On a related note, see Maybe NASA could use some buggy software.

‘From 1997 to 2003, at least 212 deaths resulted from defects in five different brands of defibrillators.’

http://williamedwardscoder.tumblr.com/post/22833490643/is-an-open-source-pacemaker-safer-than-closed-source

There is a question about open source as well. Some might view it as a risk to a company’s business model, but if it also reduces error rates, is that something to include in a cost-benefit analysis?

Sometimes open source software is more reliable because it has more developers looking at it. But sometimes commercial software is more reliable because it has more users. More users means more people to report bugs, and more financial resources to fix those bugs. Popular software is tested by more people under more circumstances. It’s great when software is open source and popular, but often you can’t have both.

Medical devices are an unusual case since the consequences of bugs are potentially dire. Also, someone who is on the receiving end of a defibrillator isn’t a “user” in the same sense as someone using a word processor.

Thanks — learning Haskell is going to be one of my summer projects and this might be one of the resources I use.

Even in Haskell there are plenty of ways to subvert the type system (unsafePerformIO, unsafeCoerce) to tell the type system “No, believe me, really!” In some ways this is an escape hatch for programmers to get things done in the real world, although it also introduces potential for unsoundness. I think the key is still using good software practices to reduce the potential for errors.
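As a minimal sketch of what such an escape hatch looks like, the following uses `unsafePerformIO` to hide a mutable counter behind a type that claims to be pure. The names `counter` and `impureNext` are made up for illustration, and the values printed can depend on how the compiler optimizes the code, which is exactly the kind of unsoundness at issue.

```haskell
import Data.IORef (IORef, newIORef, atomicModifyIORef')
import System.IO.Unsafe (unsafePerformIO)

-- A global mutable counter (hypothetical name), created outside of IO.
-- NOINLINE keeps GHC from duplicating the IORef by inlining this definition.
{-# NOINLINE counter #-}
counter :: IORef Int
counter = unsafePerformIO (newIORef 0)

-- The type says "pure function", but each evaluation bumps the counter.
-- The type checker takes our word for it.
{-# NOINLINE impureNext #-}
impureNext :: () -> Int
impureNext () =
  unsafePerformIO (atomicModifyIORef' counter (\n -> (n + 1, n + 1)))

main :: IO ()
main = do
  -- Equational reasoning says these two lines print the same value.
  -- Whether they actually do is up to the optimizer.
  print (impureNext ())
  print (impureNext ())
```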

I think Hutton’s book is the best introduction to Haskell, especially when backed up by Erik Meijer’s videos: http://channel9.msdn.com/Series/C9-Lectures-Erik-Meijer-Functional-Programming-Fundamentals

Jon, I think there’s a huge difference between facilities for subverting the type system on purpose and facilities which let you subvert the type system by accident. As the C++ folks are fond of saying, protecting against Murphy isn’t the same as protecting against Machiavelli.

Having said that, clearly neither should be allowed if security is important (e.g. Java sandboxing). There’s a place for both perspectives in programming language design.

Pseudonym – I agree, although I maintain that one person’s well-meaning but incorrect intuition, lack of experience, or lack of judgment, may not be so good taken in a different context, and this effect is multiplied in team environments or with usage of third-party libraries. Of course, you’ll be better off if you restrict yourself to the ‘blessed’ packages.

This is my favourite introductory resource for Haskell:

http://www.seas.upenn.edu/~cis194/lectures.html

It’s pitched at a similar level to Learn You A Haskell and Real World Haskell, but it has awesome exercises and it is fairly well tuned to modern Haskell idioms.

Take a look at the new book “Beginning Haskell: A Project-Based Approach” by Alejandro Serrano. This book is really good, much better than RWH, which btw is a little outdated. “Beginning Haskell” is like LYH mixed with RWH, “Parallel and Concurrent Programming in Haskell” and a good selection of online articles.

Jon – if you use Safe Haskell (http://www.haskell.org/ghc/docs/latest/html/users_guide/safe-haskell.html) you can have fatal compilation errors if you try and subvert the type system in this way.
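A rough sketch of how that looks in practice, assuming a recent GHC: the `Safe` pragma asks the compiler to reject imports of modules that are not marked safe. The function `double` is just a placeholder so the module has something to run.

```haskell
{-# LANGUAGE Safe #-}

-- Uncommenting the next line should turn into a compile-time error,
-- because System.IO.Unsafe is not a safe module:
-- import System.IO.Unsafe (unsafePerformIO)

-- Ordinary code is unaffected by the pragma.
double :: Int -> Int
double n = n * 2

main :: IO ()
main = print (double 21)
```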

What you’re saying is ancient and proven engineering wisdom. However, it just happens not to be true.

There are a number of compilers (and operating systems) with machine-checked formal proofs of correctness, such as the CompCert C compiler and the CakeML ML compiler. The development effort involved was a few times the effort that a non-provably-correct compiler would be, but it’s obviously not infinitely more expensive — both of these were built in a year or two by a small team of academic researchers.

I’m curious as to what features you think LYAH brings up too early. It’s been a while since I read it, but it’s what I usually recommend to people interested in Haskell.

That particular xkcd was a rather tongue-in-cheek jab at davean, the guy who does all the infrastructure for xkcd… in Haskell.

An example is http://www.reddit.com/r/haskell/comments/uved7/waldo_the_haskell_powered_codebase_behind_xkcds/ but it really composes about 75% of the code they have in house.

My usual recommendation is to binge through Real World Haskell and Learn You a Haskell in roughly equal measures. The former is good at getting you up to speed on spitting out nuts and bolts, the latter is better at exploring the ‘feel’ and joy of Haskell development, but is rather too fluffy to take you far.

A third book I’d add to your pile is Parallel and Concurrent Programming in Haskell by Simon Marlow. It goes a long way toward taking Haskell from being ‘just another language to program in that happens to be lazy’ to being an indispensable part of your parallel programming toolbox.

Another book I’d recommend is Pearls of Functional Algorithm Design by Richard Bird. It is an amazing foray into how to really “think” as a Haskeller and dives into a lot of diverse problems and solves them via equational reasoning.

You can think of those 2 books as more grown-up versions of RWH and LYAH respectively.

Neel: Some ways of improving the probability of correctness are more economical than others, and formal verification is cost-effective in some circumstances.

The probability that a “provably correct” piece of software is not perfectly correct is greater than zero. It may be very small, but it’s possible to make errors in the formal verification process.

John: Sure, when we prove something correct in the software sphere it is always up to some level of abstraction.

Whenever you type check you are proving free theorems about your code.

If I write something in Scheme, I have very little confidence that the types will save me. If I write something in Java, I have somewhat more, but still not enough, because Gosling decided to let me break the type system when I pass around arrays. If I write something in Scala, free theorems have no teeth. If I write it in Haskell, at least I get free theorems, but almost everything I can say is limited by the fact that I’m in a CPO and bottoms inhabit every type.
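To make “bottoms inhabit every type” concrete, here is a small sketch. The function `firstElem` is a made-up name; its type promises an element for every list, and the only way to keep that promise for the empty list is to return a bottom.

```haskell
import Control.Exception (SomeException, evaluate, try)

-- The type checks, but for [] there is no honest value of type 'a' to return.
firstElem :: [a] -> a
firstElem (x:_) = x
firstElem []    = error "firstElem: empty list"  -- a bottom at type 'a'

main :: IO ()
main = do
  print (firstElem [1, 2, 3 :: Int])
  -- Forcing the bottom raises an exception, which we catch here.
  r <- try (evaluate (firstElem ([] :: [Int]))) :: IO (Either SomeException Int)
  putStrLn (either (const "bottom: evaluation failed") show r)
```

So a well-typed call can still fail at run time, and any guarantee read off a type has to be qualified with “unless a bottom shows up.”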

If I were to write it in Agda, I’d get more guarantees, but only up to whatever ad hoc extensions they’ve added to the core type theory today. If I wanted to write it in Coq, I probably have to go learn French. ;)

When people say well typed programs in Haskell don’t go wrong they are noting that the free theorems you get from the process of type-checking code in Haskell rule out a class of programs that is highly correlated to the kinds of bad programs people write in practice.

We use quickcheck, doctest, test-framework, etc. to improve the tightness of that fit, and other people often use bindings to SAT solvers and code extraction from Coq or Agda to increase reliability guarantees for those mission critical bits of software.

The usual model for something that is stuck hard realtime isn’t to write it directly in Haskell, but rather to write the Haskell code that can generate what needs to run on the device, using something like atom or copilot or SBV to “prove” it correct enough.

Edward: I’m very interested in formal verification methods. I was excited by the idea when I first saw it in college, but quickly grew disillusioned. Now things seem to have matured to the point that formal verification is practical for a wider class of problems, and I’m starting to look into Coq.

I also grew disillusioned with them. I was first introduced to formal methods through more of a Hoare logic approach, and the idea that that was what I’d have to do to prove my code correct was galling to me, even when I didn’t know better. =)

A few rambling overgeneralizations:

Agda in my experience tends to be very brittle. Small changes in the code result in large changes in the scaffolding proofs. So if you like to refactor often it can be a pain. This is a consequence of the proof terms you write literally being the code you run.

Coq on the other hand is much more robust in the presence of changes as you don’t write out proofs directly, but instead hint with a series of tactics. This set of tactical hints can survive refactorings, whereas the Agda approach implodes. The downside of this is that the Agda code was often much more legible, as a wall of tactical hints isn’t good reading, and because Coq has such a strong tactics system their code is rarely anywhere near as modular and clean as Agda code.

For me, the reason I mostly write Haskell these days is that it sits at a sweet spot: formally correct enough to reason about, and flexible enough that changing the program around doesn’t require a lot of scaffolding shearing off.

I find that the solution when I don’t know if a piece of code is correct in Haskell is to just keep increasing generality.

The more parametric the function the stronger the free theorem I am asking the compiler to prove about it every time I compile.
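A small sketch of that idea, with made-up function names. A function of type `[a] -> [a]` cannot inspect its elements, so (ignoring bottoms) it must commute with `map g` for any `g`. A function pinned to `[Int] -> [Int]` gets no such guarantee. The helper `naturalAt` only checks the property at one input; the type checker is what establishes it in general.

```haskell
{-# LANGUAGE RankNTypes #-}

-- Monomorphic: the type lets us look at and change the elements.
bumpFirst :: [Int] -> [Int]
bumpFirst []       = []
bumpFirst (x : xs) = x + 1 : xs

-- Parametric: all it can do is drop, duplicate, or rearrange elements.
everyOther :: [a] -> [a]
everyOther (x : _ : rest) = x : everyOther rest
everyOther xs             = xs

-- Spot-check the free theorem for [a] -> [a]:  map g . f == f . map g
naturalAt :: Eq b => (forall x. [x] -> [x]) -> (a -> b) -> [a] -> Bool
naturalAt f g xs = map g (f xs) == f (map g xs)

main :: IO ()
main = do
  -- Holds for the parametric function.
  print (naturalAt everyOther show [1 .. 10 :: Int])
  -- The same equation fails for the monomorphic one.
  print (map (* 2) (bumpFirst [1, 2, 3]) == bumpFirst (map (* 2) [1, 2, 3]))
```

Note that `bumpFirst` could not even be passed to `naturalAt`: the compiler rejects it because it is not polymorphic in the element type.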

The nice side effect of this for me is that the code I get out of it is instantly more reusable, as the lines along which parametricity required me to generalize the code are highly correlated with the kinds of places I was likely going to have to generalize later on.

With Agda or Coq though you wind up in scenarios where you have code that is _almost_ the same, but for different ornamentations. The number of structures that collapse down to the same thing in Haskell explodes back outwards again.

I’ve been using Haskell in real-world projects for a while, and for me the main selling point of Haskell is not correctness.

Haskell gives the optimal performance-effort ratio. I can quickly write very simple and compact code and it usually performs very well. It’s like programming in Python while getting performance of C. But it’s actually more compact than Python, sometimes much more performant than C and easier to maintain and refactor.

An interesting concept is that software is valued not by the number of defects but by the value it provides. That is difficult for a lot of people involved.

Also, a comment about medical software: it is extremely rare that the developers can ever actually use the software they develop. I was thinking about TurboTax recently and it occurred to me that almost all Intuit employees can use at least some part of the software, while someone who writes a pharmacy dispensing system will never use any of it. That makes it very difficult for the programmers to have any deep feel for the actual end use; they rely almost 100% on clinical people describing what the software should do.

John

I think if anyone wants to talk about the limitations of functional programming for “real world” applications, they need to address the serious challenge to that opinion offered by OCaml use at Jane Street Capital. See:

https://vimeo.com/14317442

https://ocaml.janestreet.com/?q=blog/5

https://ocaml.janestreet.com/

Essentially, Jane Street can’t afford to have software that fails because it spends millions in seconds.

A particularly nice thing about Real World Haskell is the way the free online version works.

I have the paper version and prefer to read that, but the online version is effectively structured like a web forum with a comments section for every paragraph. As many of the prior readers have encountered the same problems as me, it makes for a remarkably useful supplement to the book.