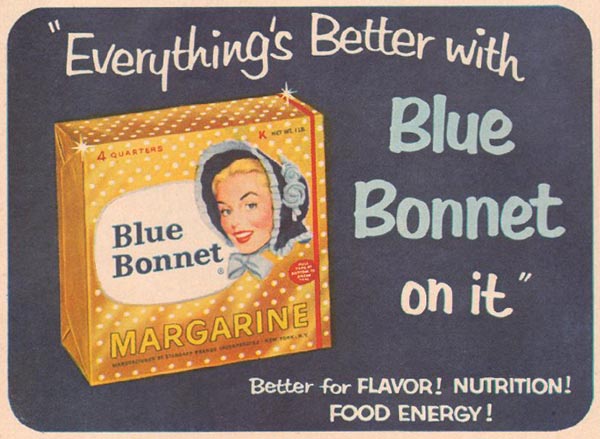

Blue Bonnet™ used to run commercials with the jingle “Everything’s better with Blue Bonnet on it.” Maybe they still do.

Perhaps in reaction to knee-jerk antipathy toward Bayesian methods, some statisticians have adopted knee-jerk enthusiasm for Bayesian methods. Everything’s better with Bayesian analysis on it. Bayes makes it better, like a little dab of margarine on a dry piece of bread.

There’s much that I prefer about the Bayesian approach to statistics. Sometimes it’s the only way to go. But Bayes-for-the-sake-of-Bayes can expend a great deal of effort, by human and computer, to arrive at a conclusion that could have been reached far more easily by other means.

Related: Bayes isn’t magic

I’m seeing this a lot, and I think part of what’s driving it is the idea that if you’re using Bayesian methods, you either don’t have to worry about whether frequentist assumptions have been violated, or for some reason need to worry less. Take a look at an R package released by Google called “CausalImpact,” a Bayesian tool they use as proof of the effectiveness of advertising campaigns to customers. I know a little something about time series analysis. When I saw the claims about CausalImpact, I was skeptical but thought wow, if that really works… A little bit of testing showed that it choked quickly on time series with autoregressive or unstable variance components. Those aren’t identified by the authors as assumptions of the model, but they’re a big reason it’s hard to get strong conclusions or predictive value from many analyses of time series using frequentist methods. The Bayesian tool was producing results similar to what a naive frequentist tool would produce if you imagined away violations of assumptions about the series. My hypothesis is that the Bayesian tool isn’t any better (although some Bayesian tools are surely better for some problems), but merely less well understood.
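To make the general point about autocorrelation concrete (this is not a test of CausalImpact, which the sketch does not use), here is a small simulation. It assumes a made-up AR(1) series with coefficient 0.8 and compares the textbook standard error of the mean, which assumes independent observations, with how much the sample mean actually varies across repeated series. All names and parameter values are illustrative.

```python
import math
import random
import statistics


def simulate_ar1(n, phi, rng):
    """Simulate a stationary AR(1) series x[t] = phi * x[t-1] + noise."""
    # Start from the stationary distribution so no warm-up is needed.
    x = rng.gauss(0.0, 1.0 / math.sqrt(1.0 - phi * phi))
    series = []
    for _ in range(n):
        series.append(x)
        x = phi * x + rng.gauss(0.0, 1.0)
    return series


rng = random.Random(1)
n, phi, replications = 200, 0.8, 500

means, naive_ses = [], []
for _ in range(replications):
    series = simulate_ar1(n, phi, rng)
    means.append(statistics.mean(series))
    # Standard error formula that pretends the observations are independent.
    naive_ses.append(statistics.stdev(series) / math.sqrt(n))

actual_sd_of_mean = statistics.stdev(means)
average_naive_se = statistics.mean(naive_ses)
ratio = actual_sd_of_mean / average_naive_se

print(f"actual sd of the sample mean: {actual_sd_of_mean:.3f}")
print(f"average naive standard error: {average_naive_se:.3f}")
print(f"ratio (actual / naive):       {ratio:.2f}")
```

For an AR(1) process the large-sample inflation factor for the variance of the mean is (1 + phi) / (1 - phi), so with phi = 0.8 the ratio of standard deviations should land somewhere near 3. Any method that ignores the autocorrelation, Bayesian or frequentist, inherits that overconfidence.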

Do you happen to have any specific or widespread examples of this?

@Christian: Yes, though I’d rather not go into specifics here.

I am just a beginner and I have been taught that Bayesian methods generalize the frequentist methods, in the sense that any conclusion drawn on a frequentist basis would also be drawn on a Bayesian basis. I have the impression that Bayesian methods are like a bazooka: if you do not know the size of your enemy, a bazooka will likely kill it. It is worth the effort if the enemy is a dragonsaur, otherwise it may be a huge waste of resources if it is only a tiny fly.

I would not say that Bayesian methods generalize frequentist methods. Sometimes the two approaches reach substantially different conclusions, though often they converge to the same limit asymptotically.

The generalization I meant is that frequentist methods correspond to Bayesian methods when one uses an uninformative prior. Perhaps it is not suitable to make such a strong assertion and the person who stated this to me may have incurred a great sin. I already knew that the conclusions drawn from both approaches may be in theory very different, particularly in case one has a small sample.
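A minimal sketch of where that correspondence holds and where it stops, assuming a binomial proportion with a conjugate Beta prior and a made-up sample of 3 successes in 10 trials. With a flat Beta(1, 1) prior the posterior mode coincides with the maximum likelihood estimate, but the posterior mean does not, and an informative prior (here an arbitrary Beta(20, 20)) moves the answer noticeably in a sample this small.

```python
def beta_posterior(successes, failures, prior_a=1.0, prior_b=1.0):
    """Return (a, b) of the Beta posterior for a binomial proportion."""
    return prior_a + successes, prior_b + failures


def beta_mode(a, b):
    """Mode of Beta(a, b); this formula requires a > 1 and b > 1."""
    return (a - 1.0) / (a + b - 2.0)


def beta_mean(a, b):
    """Mean of Beta(a, b)."""
    return a / (a + b)


successes, failures = 3, 7  # small, made-up sample
mle = successes / (successes + failures)

# Flat prior: the posterior is proportional to the likelihood.
a, b = beta_posterior(successes, failures)
flat_mode = beta_mode(a, b)  # same value as the MLE
flat_mean = beta_mean(a, b)  # (3 + 1) / (10 + 2), not the MLE

# Informative prior centered on 0.5 (an arbitrary choice for illustration).
a_inf, b_inf = beta_posterior(successes, failures, prior_a=20.0, prior_b=20.0)
informative_mode = beta_mode(a_inf, b_inf)  # pulled toward 0.5

print(f"MLE:                          {mle:.4f}")
print(f"posterior mode, flat prior:   {flat_mode:.4f}")
print(f"posterior mean, flat prior:   {flat_mean:.4f}")
print(f"posterior mode, Beta(20, 20): {informative_mode:.4f}")
```

So even in this friendly case the agreement depends on which posterior summary you report, and what counts as “uninformative” depends on the parameterization.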

Sure, there are problems which are simply difficult, and unapproachable. For example, Christian Robert and colleagues have done work on mixture modeling, discussed in a recent blog post that identifies both a book he co-edited and independent work by Rodriguez and Walker on the question. Sure, in the general case, the components are not identifiable. But if, using application knowledge, the components are known to be substantially different in mean or variance or other characteristic, or if some of the tricks from Bayesian non-parametrics are appropriate, the problem can succumb. It also depends on how patient the student wants to be for an answer. JAGS, for instance, has a mixture modeling module, but it is computationally very expensive.

“Bayes”, I think, means more than “non-frequentist”. There’s a famous letter (from 1971!) by I. J. Good written to The American Statistician called “46656 Varieties of Bayesians”, followed by Kass’ piece in 2006. Apart from embracing the idea that statistical parameters are random variables, there are, as I’m sure everyone would agree, a lot of ways to go. Practicing Bayesians are, I think, more often than not slightly empirically Bayesian, for the line between prior knowledge of the field and prior data is not always so easy to draw. This is despite such practice being roundly condemned by some Bayesian saints, who are worthy of deep respect for their many contributions against strong headwinds.

Bayes doesn’t so much displace frequentist methods as subsume them. There’s a lot of frequentist knowledge used in building sampling distributions. It’s just that the Bayesian student isn’t married to a particular distribution, realizing that, in reality, most are multimodal, and that it’s always important to put the problem space first, not the niftiest solution on hand.

There’s also an evolving school of practice in which the computational methods of modern Bayesian inference are seen as tools for stochastic search and optimization (e.g., as in James Spall’s book on the subject), quite separate from their Bayesian underpinnings.

There’s also a set of problems which are highly decision oriented for which few data are available, either one-off cases, or decisions which need to be made time and time again. In the frequentist world, at least in numerical linear algebra, some of these were approached using so-called minimum norm solutions, where many more parameters than data were available. Bayesian methods offer another means of dealing with these, as long as the extra parameters can be integrated away.

And, yes, there is the overall question of when a student can know that an MCMC chain has indeed converged. We have tests, we have rules of thumb, we have lots of tricks of the trade, but, as in most statistics, it’s a matter of knowing the problem, as I’m sure everyone will agree.
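One of those rules of thumb, sketched under simplifying assumptions: the classic Gelman-Rubin potential scale reduction factor (R-hat), computed here for a toy random-walk Metropolis sampler targeting a standard normal. The sampler, the starting points, the step sizes, and the chain lengths are all invented for illustration, and modern software uses refined variants of the statistic (split chains, rank normalization) that this sketch leaves out.

```python
import math
import random
import statistics


def metropolis(log_density, start, steps, step_size, rng):
    """Random-walk Metropolis sampler for a one-dimensional target."""
    x, lp = start, log_density(start)
    chain = []
    for _ in range(steps):
        proposal = x + rng.gauss(0.0, step_size)
        lp_new = log_density(proposal)
        # Accept with probability min(1, target(proposal) / target(x)).
        if rng.random() < math.exp(min(0.0, lp_new - lp)):
            x, lp = proposal, lp_new
        chain.append(x)
    return chain


def gelman_rubin(chains):
    """Classic potential scale reduction factor for equal-length chains."""
    n = len(chains[0])
    within = statistics.mean(statistics.variance(c) for c in chains)
    between_over_n = statistics.variance([statistics.mean(c) for c in chains])
    pooled = (n - 1) / n * within + between_over_n
    return math.sqrt(pooled / within)


def standard_normal_log_density(x):
    """Log density of N(0, 1), up to an additive constant."""
    return -0.5 * x * x


def run(steps, step_size, seed=0):
    rng = random.Random(seed)
    starts = [-10.0, -3.0, 3.0, 10.0]  # deliberately overdispersed
    chains = [
        metropolis(standard_normal_log_density, s, steps, step_size, rng)
        for s in starts
    ]
    # Discard the first half of each chain as warm-up.
    return gelman_rubin([c[steps // 2:] for c in chains])


rhat_long = run(steps=5000, step_size=2.0)
rhat_short = run(steps=200, step_size=0.05)
print(f"long, well-tuned chains:   R-hat = {rhat_long:.3f}")
print(f"short, poorly tuned chains: R-hat = {rhat_short:.3f}")
```

A value near 1 says the chains agree with one another; it does not prove they have found the whole posterior. Chains that all sit in the same mode of a multimodal target will agree perfectly, which is why the diagnostic is no substitute for knowing the problem.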

In my experience, the most powerful reason to “go Bayes” is that any problem, then, is never “finished”. With additional data, perhaps of a vastly different kind (some categorical in addition to the continuous time series that began the quest, for instance), the problem can be re-opened, and it’s known how to proceed. There are many ways to proceed, yes, as any examination of Lunn, Jackson, Best, Thomas, and Spiegelhalter or of the several valuable books by Congdon will attest. But my personal abhorrence of purely frequentist styles in the aftermath of the Bayesian computational revolution stems from the fact that these treatments tend to be like virtuoso performances, like beautiful crystals, built once and pretending to be For The Ages and The Last Word. No real problem is like that.
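The simplest version of “re-opening” a problem can be sketched with a conjugate model, assuming a normal mean with known noise variance and made-up numbers: yesterday’s posterior becomes today’s prior, and updating in two batches gives the same answer as analyzing all the data at once. Folding in data of a different kind needs a richer model than this, so treat it as the easy case only.

```python
def update_normal_mean(prior_mean, prior_var, data, noise_var):
    """Posterior (mean, variance) for a normal mean with known noise variance."""
    prior_precision = 1.0 / prior_var
    data_precision = len(data) / noise_var
    posterior_precision = prior_precision + data_precision
    posterior_mean = (
        prior_precision * prior_mean + sum(data) / noise_var
    ) / posterior_precision
    return posterior_mean, 1.0 / posterior_precision


first_batch = [4.8, 5.3, 5.1]         # made-up measurements
second_batch = [4.6, 5.4, 5.0, 4.9]   # arrives later

# Vague prior, then update on the first batch.
m1, v1 = update_normal_mean(0.0, 100.0, first_batch, noise_var=1.0)
# Re-open the problem: the old posterior is the new prior.
m2, v2 = update_normal_mean(m1, v1, second_batch, noise_var=1.0)
# Same data analyzed in one pass, for comparison.
m_all, v_all = update_normal_mean(
    0.0, 100.0, first_batch + second_batch, noise_var=1.0
)

print(f"after first batch:  mean = {m1:.4f}, variance = {v1:.4f}")
print(f"after second batch: mean = {m2:.4f}, variance = {v2:.4f}")
print(f"all data at once:   mean = {m_all:.4f}, variance = {v_all:.4f}")
```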

And, when some problems resist, yes, students need the humility to say, “It can’t be done right now.”

Pardon if I wax a bit controversial, but a good discussion like this is great for the statistical soul.